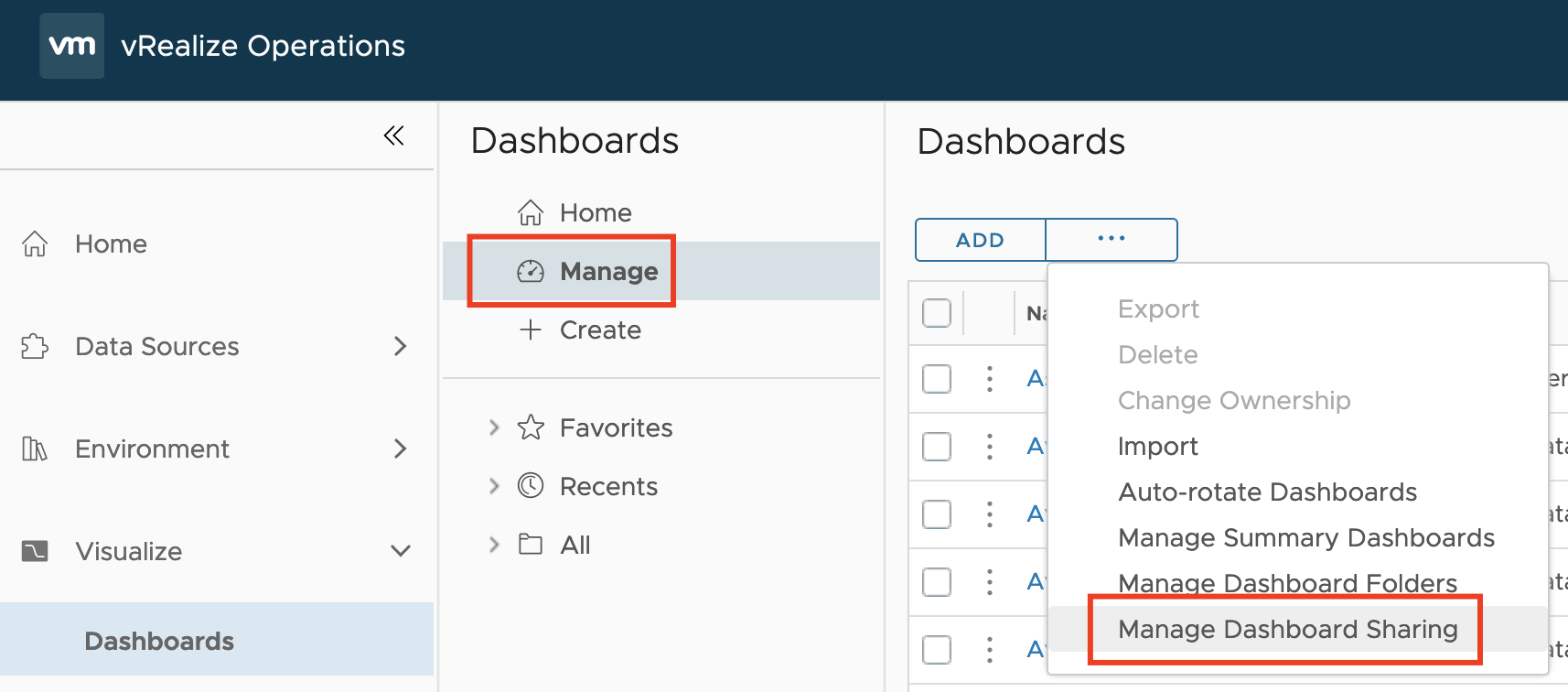

Another question I was asked during my “Meet the Expert – Creating Custom Dashboards” session, which I could not answer due to the limited time, was: “How to manage access permissions to Aria Operations Dashboards in a way that allows only a specific group of content admins to edit only a specific group of dashboards?” Even …

Category: vRealize Operations

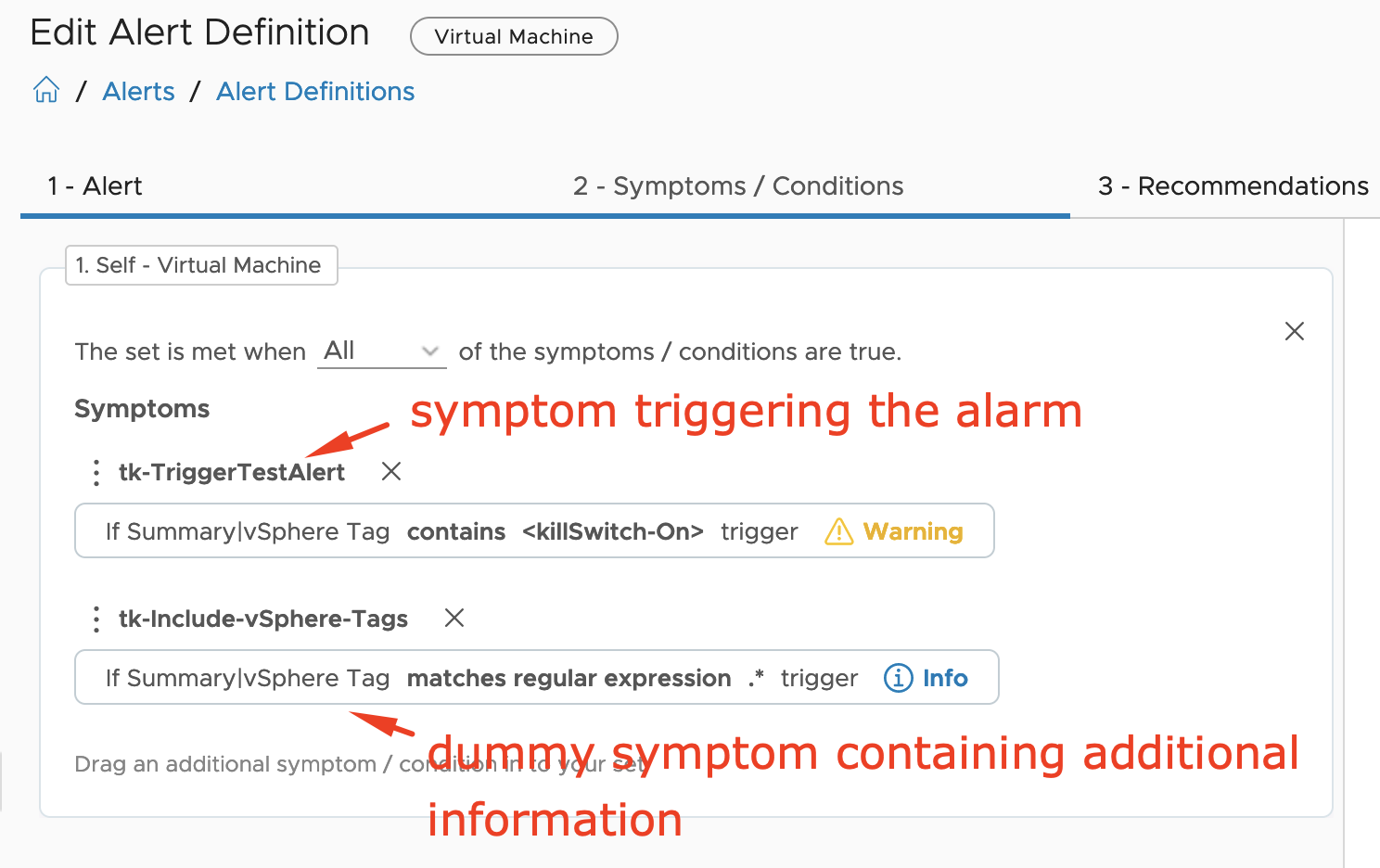

VMware Explore Follow-up – Aria Operations and SNMP Traps

During one of my “Meet the Expert” sessions this year in Barcelona, I was asked if there is an easy way to use SNMP traps as Aria Operations notifications and let the SNMP trap receiver decide what to do with the trap based on included information except for the alert definition, object type or object …

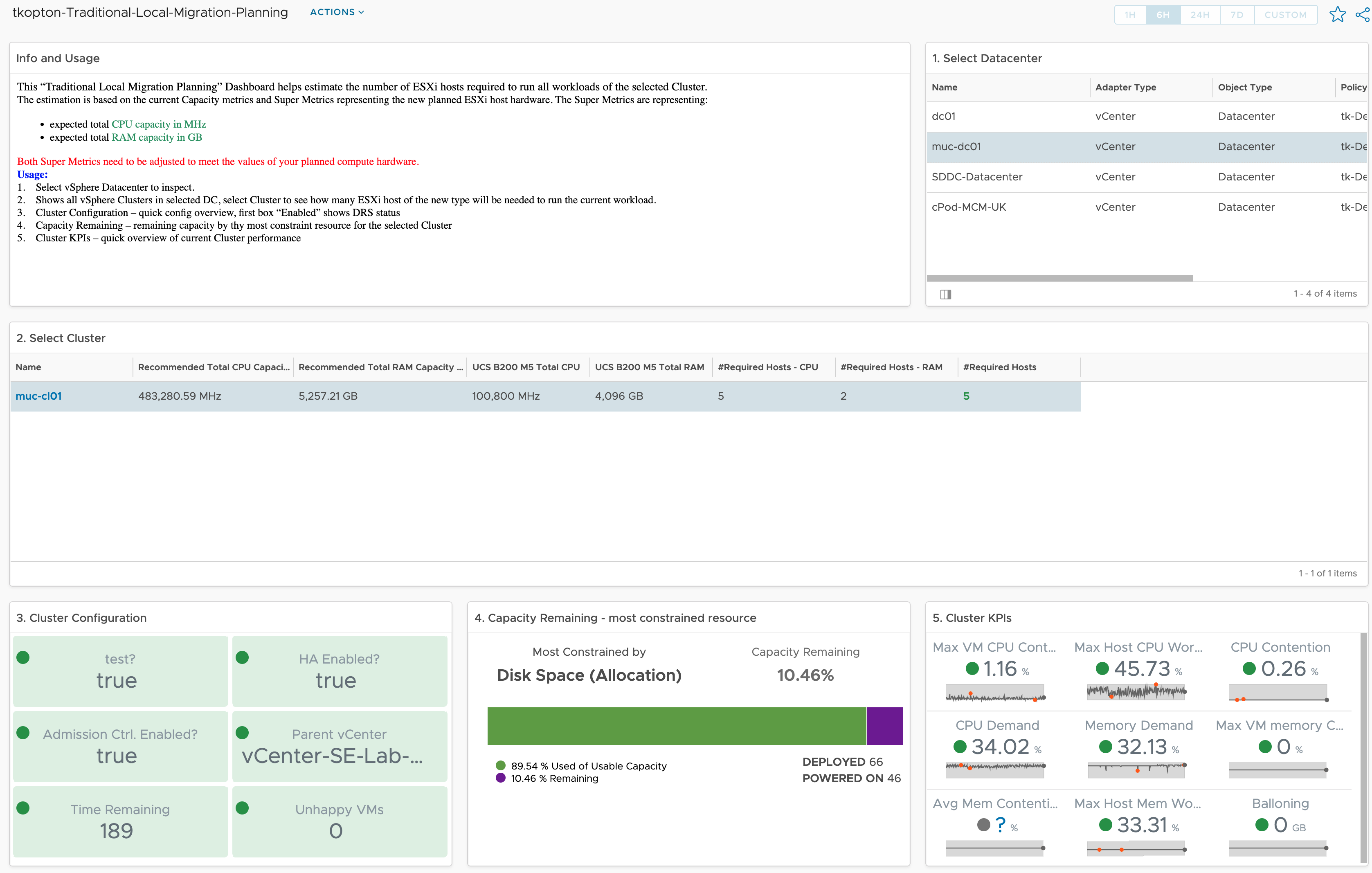

How vRealize Operations helps size new vSphere Clusters

In “ESXi Cluster (non-HCI) Rightsizing using vRealize Operations”, I described how to use vRealize Operations and the numbers calculated by the Capacity Engine to estimate the number of ESXi hosts that might be moved to other clusters or decommissioned. The corresponding dashboard is available via VMware Code. In this post, I describe the opposite …

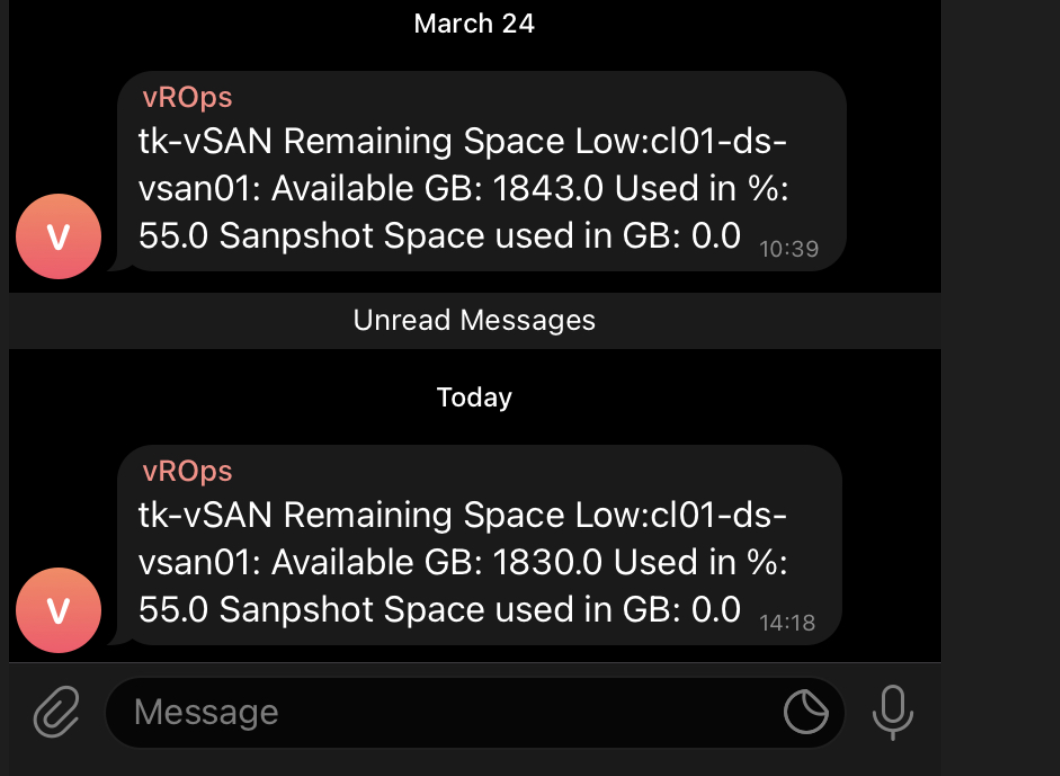

vRealize Operations and Telegram – Alerts on your Phone

As you all know, vRealize Operations is a perfect tool to manage and monitor your SDDC. In case of issues, vROps creates alerts and informs you as quickly as possible, providing many details related to each alert. Without any additional customization, vRealize Operations displays the alerts in the Alerts tab. Sending alerts via email …

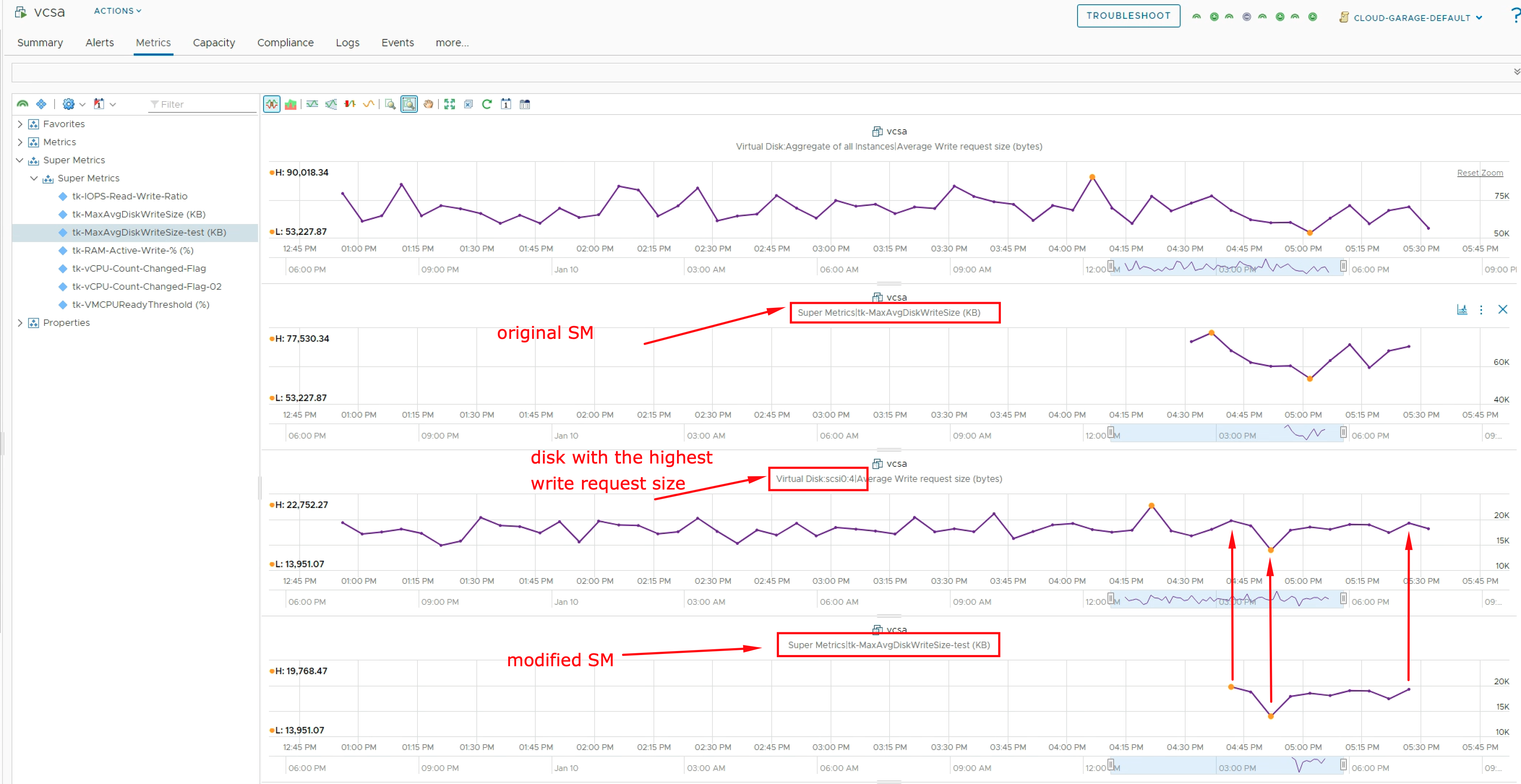

Exclude “Aggregate” Instanced Metric using vRealize Operations Super Metric

As you know, vRealize Operations collects a large number of metrics. Some of these metrics are so-called “Instanced Metrics” and are disabled in the default configuration in newer vROps versions. A list of disabled instanced metrics for, e.g., the Virtual Machine object type is available here: https://docs.vmware.com/en/vRealize-Operations/8.6/com.vmware.vcom.metrics.doc/GUID-1322F5A4-DA1D-481F-BBEA-99B228E96AF2.html#disabled-instanced-metrics-18 If you need any of those metrics, you can enable …

vCenter Events as vRealize Operations Alerts using vRealize Log Insight

As you probably know, vRealize Operations provides several symptom definitions based on message events as part of the vCenter Solution content out of the box. You can see some of them in the next picture. These events are used in alert definitions to raise vRealize Operations alarms any time one of those events is triggered in any of …

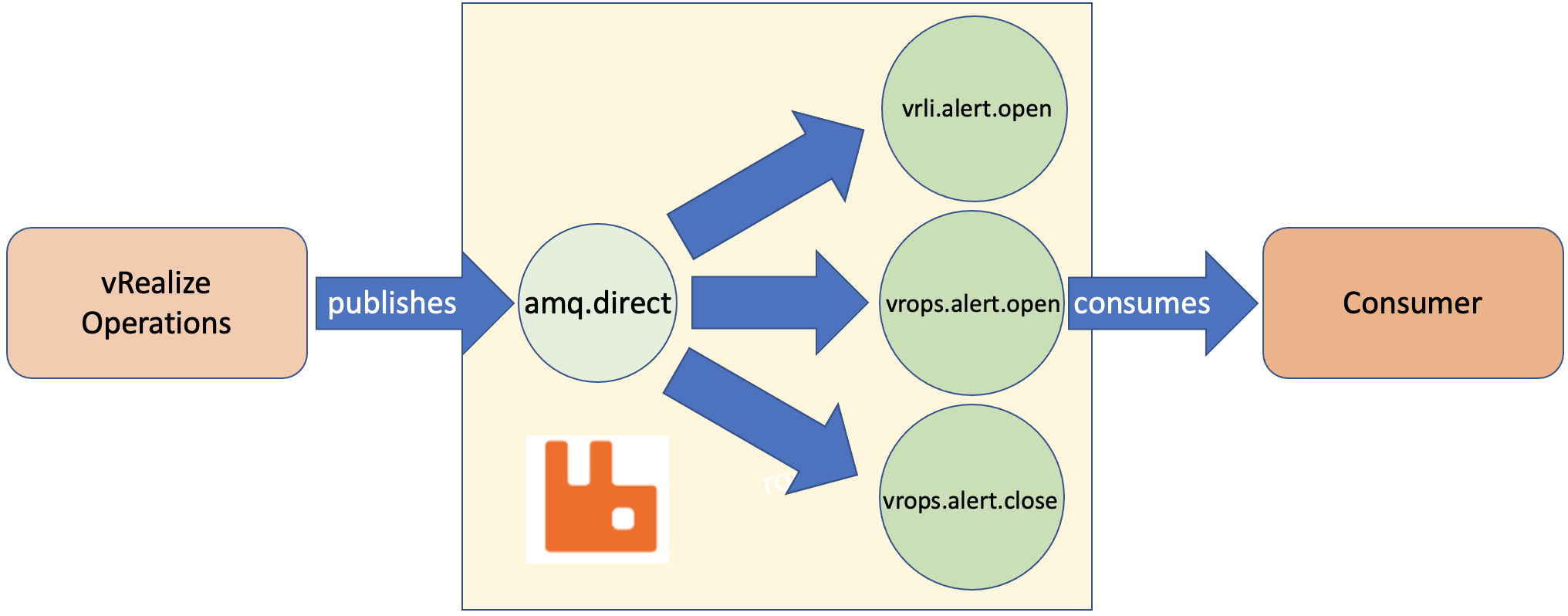

vRealize Operations AMQP Integration using Webhooks

With version 8.4, vRealize Operations introduced the Webhook Outbound Plugin feature. This new Webhook outbound plugin works without any additional software, so the Webhook Shim server becomes obsolete. In this post, I will explain how to integrate vRealize Operations with an AMQP system. For this exercise, I have deployed a RabbitMQ server, but the concept …

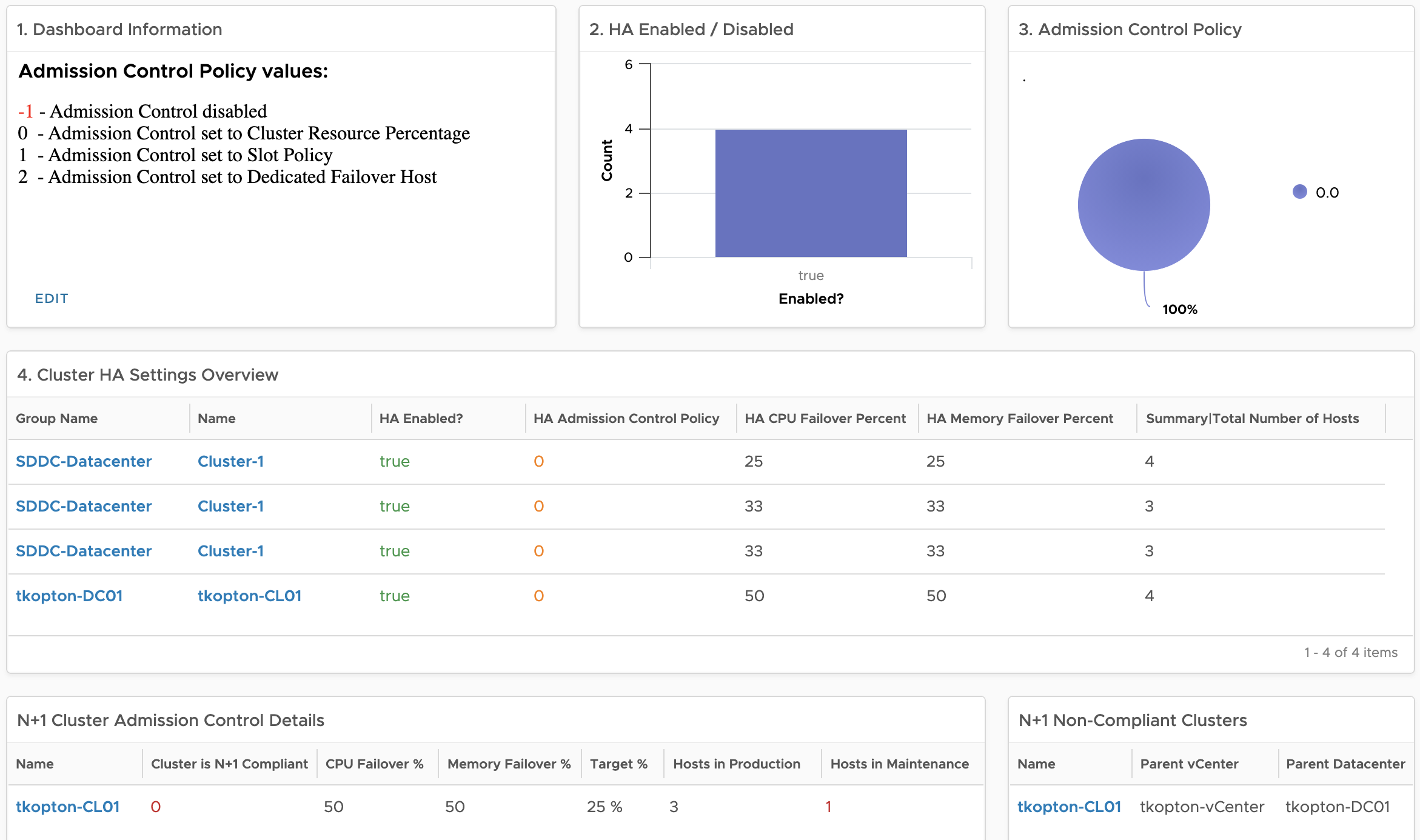

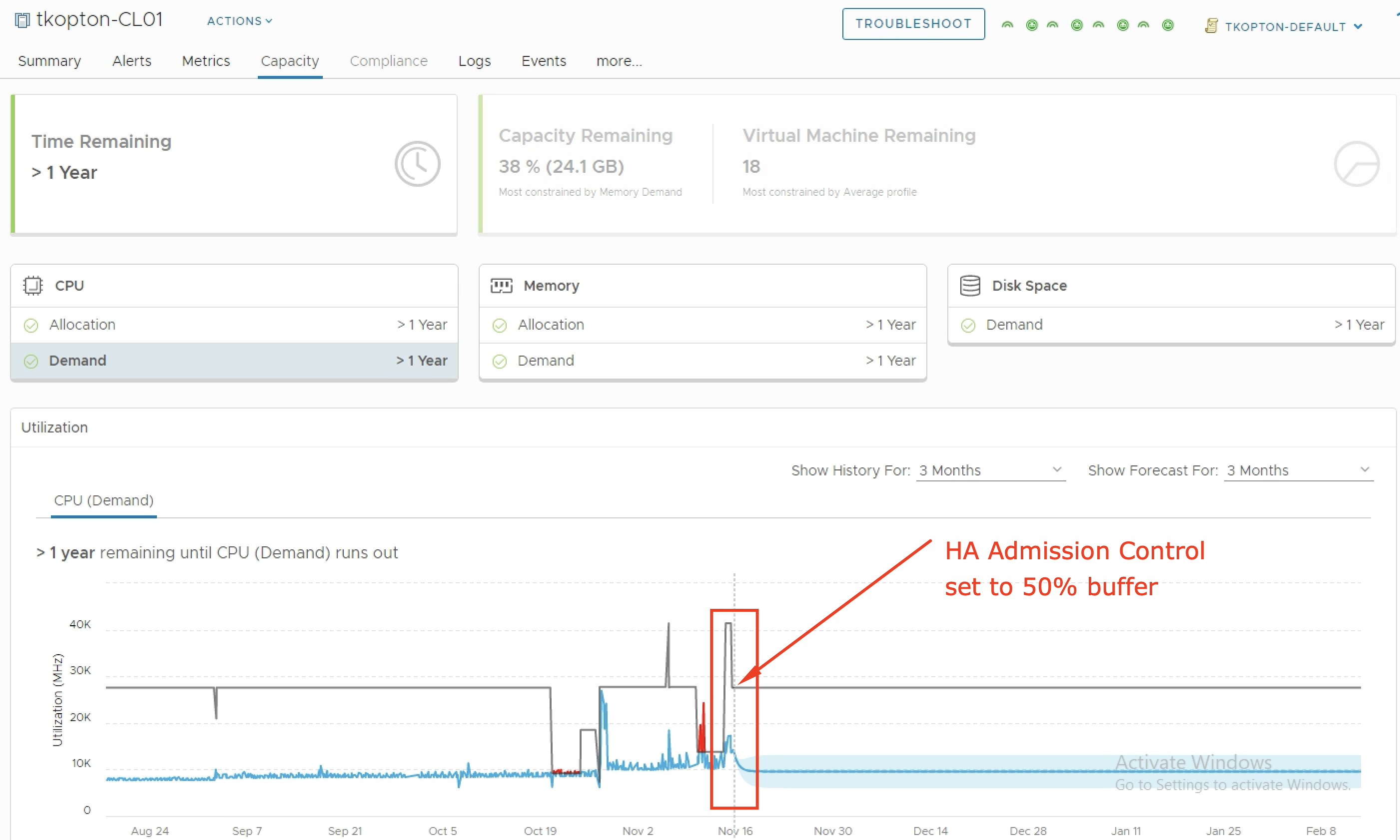

Monitoring vSphere HA and Admission Control Settings using vRealize Operations

vSphere High Availability (vSphere HA) and Admission Control ensure that sufficient resources are reserved for virtual machine recovery when a host fails. Usually, my customers are running their vSphere clusters in either N+1 or N*2 configurations, reflected in the corresponding Admission Control settings. In one of my previous blog posts, I described how vRealize Operations helps with …

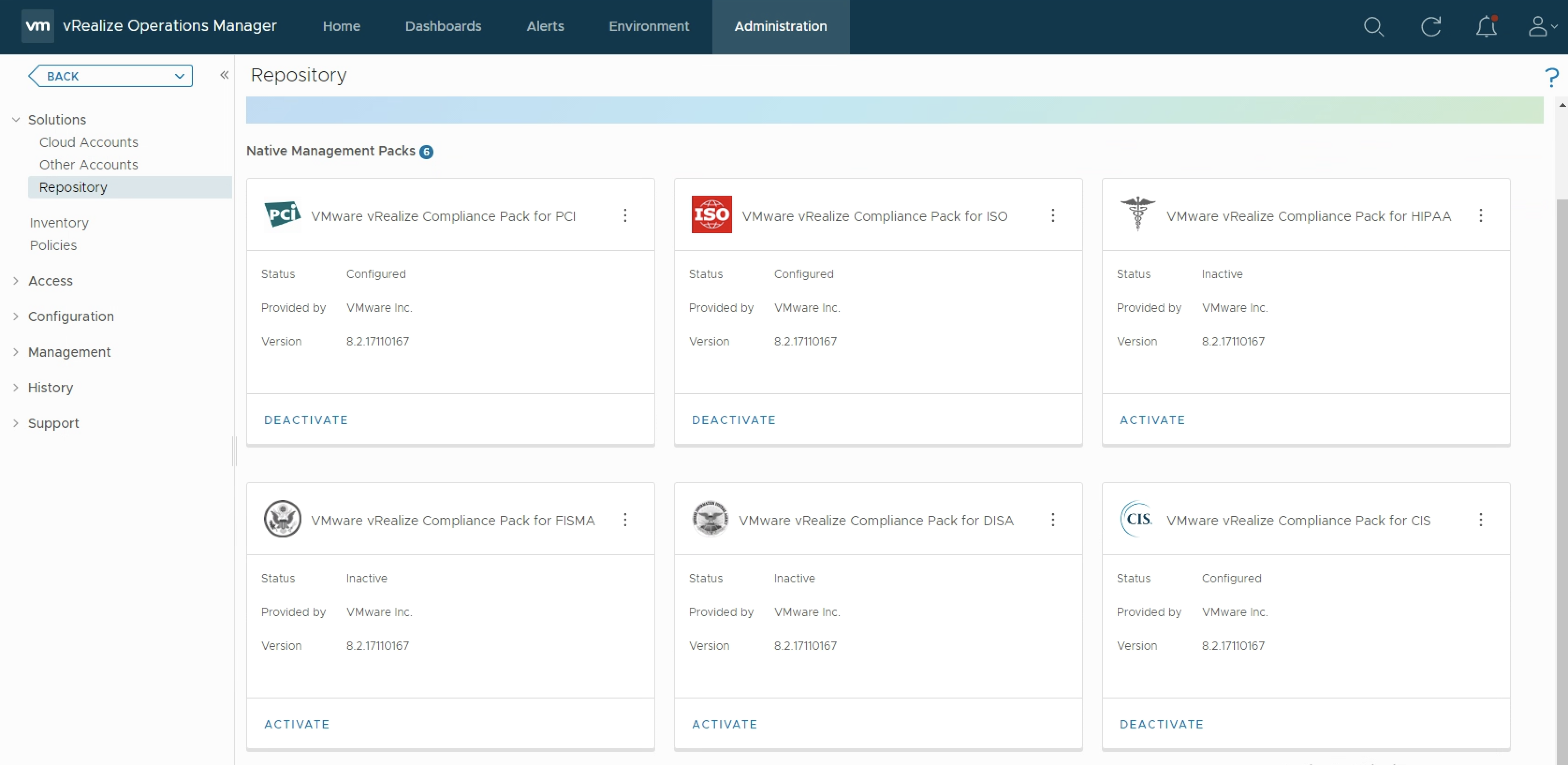

Custom Compliance Management using vRealize Operations

As you probably know, vRealize Operations provides several Compliance Packs out of the box (“natively”). A simple click on “ACTIVATE” in the “Repository” tab installs all needed components of the Compliance Pack and allows the corresponding regulatory benchmarks to be executed. “Regulatory benchmarks provide solutions for industry standard regulatory compliance requirements to enforce and report on the …

Capacity Management for n+1 and n*2 Clusters using vRealize Operations

When it comes to capacity management in vSphere environments using vRealize Operations, customers frequently ask for guidelines on how to set up vROps to properly manage n+1 and n*2 ESXi clusters. Just as a short reminder, n+1 in the context of an ESXi cluster means that we are tolerating (and are hopefully prepared for) the failure of …