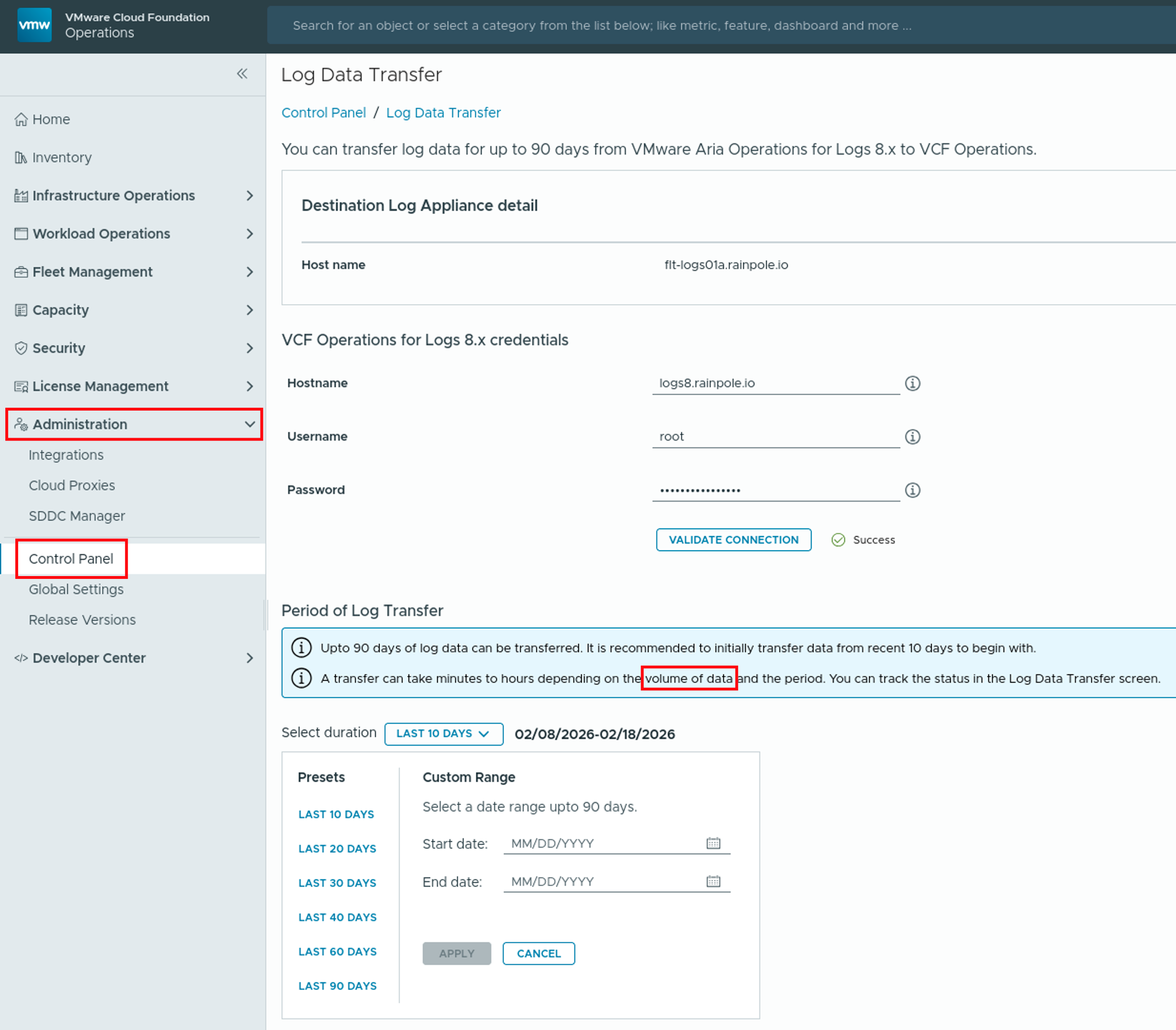

Introduction to Log Data Transfer in VCF Operations 9

With the release of VMware Cloud Foundation (VCF) 9, Logs is now more integrated into Operations. Moving from Aria Operations for Logs 8.18.x to VCF 9 does not support a direct, in-place upgrade; instead, administrators must perform a fresh deployment of the 9.0 appliance. This deployment …

Category: vRealize Log Insight

Migrating Content and Config from Aria Operations for Logs 8.18 to VCF Operations for Logs 9

Introduction: As organizations modernize their private clouds with VMware Cloud Foundation 9, the logging infrastructure undergoes a significant evolution. Transitioning from VMware Aria Operations for Logs 8.18.x (formerly vRealize Log Insight) to the new VCF Operations for Logs 9 is designed as a side-by-side migration requiring a fresh deployment. While there is a fully supported …

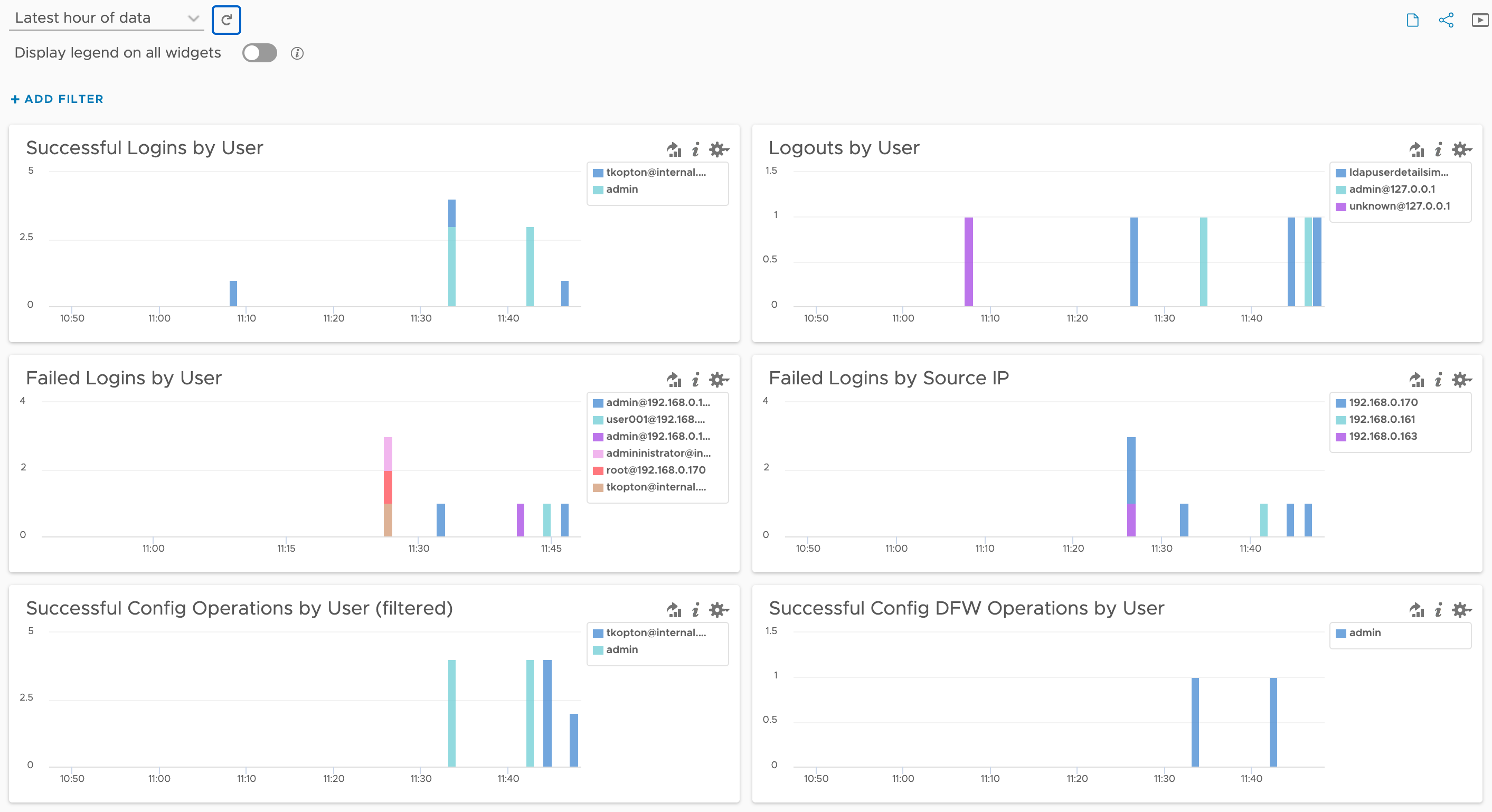

NSX User Ops Audit using Aria Operations for Logs

Recently, a customer asked me if it’s possible to monitor or retrospectively see which user performed specific actions in NSX using the tools available in the VMware Cloud Foundation (VCF) stack — essentially, a typical user actions audit for NSX. Although we don’t have an exact match in the current Aria Operations for Logs NSX …

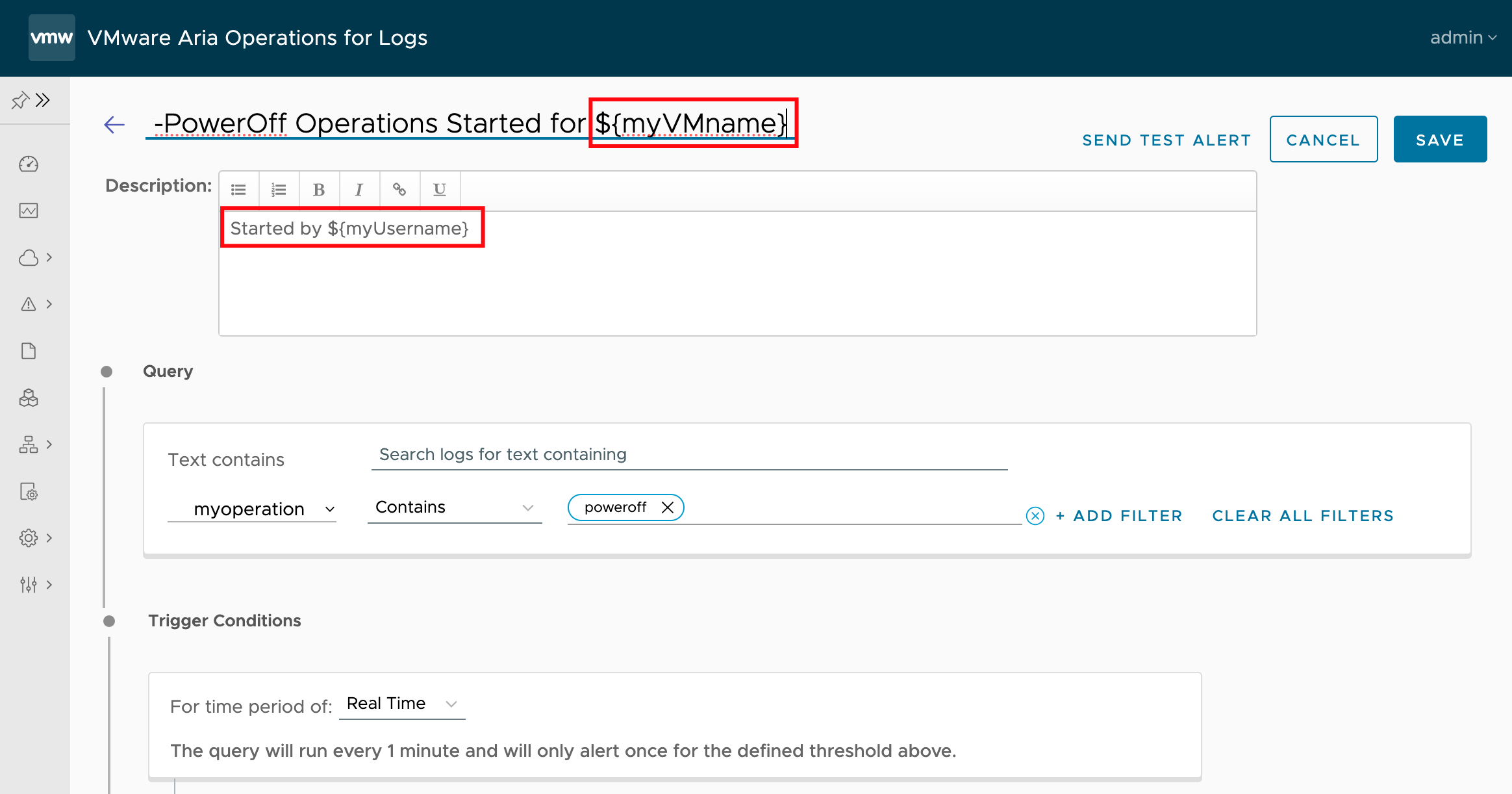

Aria Operations for Logs – Fields as Part of Alert Title – Quick Tip

As described in the official VMware Aria Operations for Logs (formerly known as vRealize Log Insight) documentation, you can customize alert names by including a field in the format ${field name}. For example, in the following alert definition, which is triggered by VM migration operations, the title will contain the name of the user who …

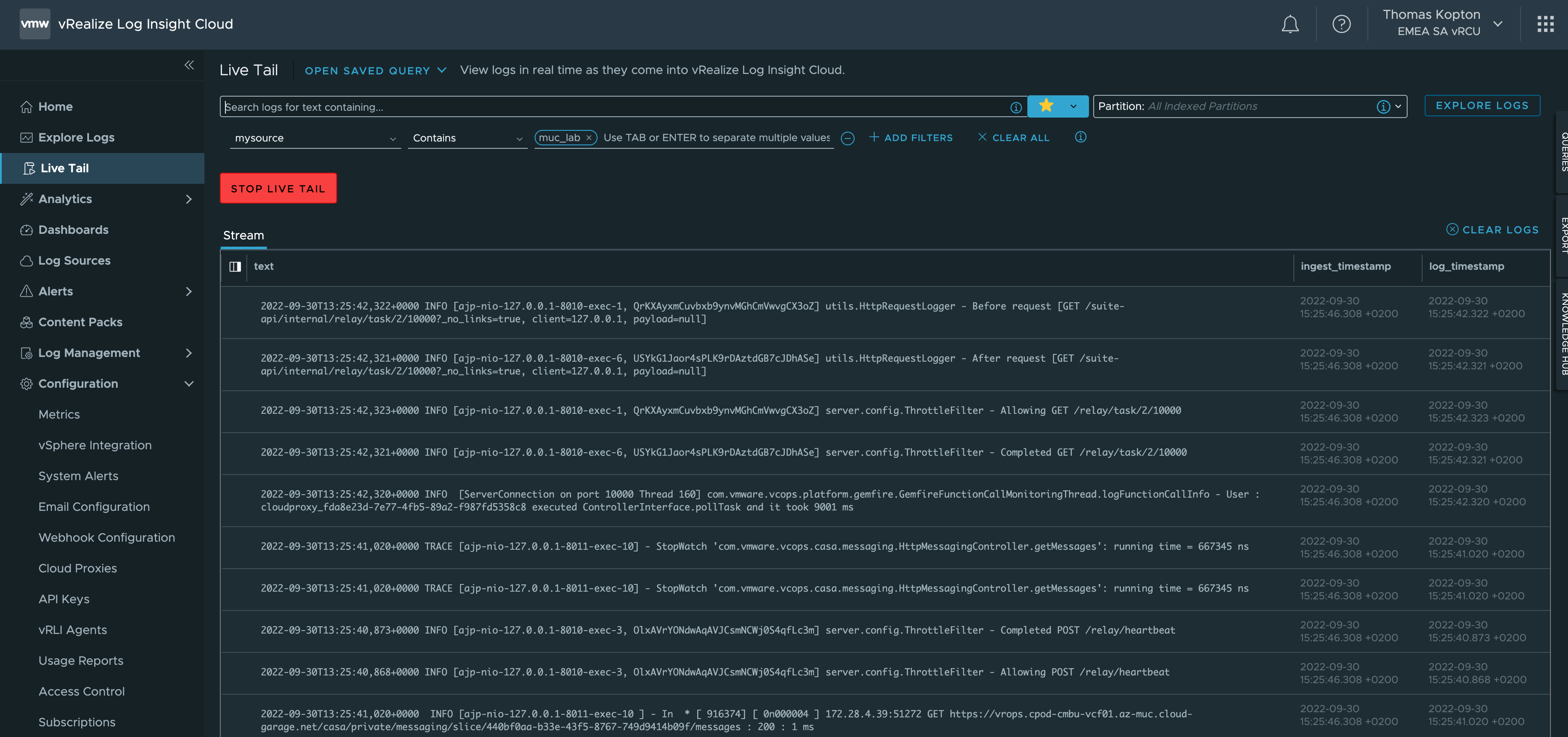

vRealize Log Insight and Direct Forwarding to Log Insight Cloud

Since the release of vRealize Log Insight 8.8, you can configure log forwarding from vRealize Log Insight to vRealize Log Insight Cloud without deploying an additional Cloud Proxy. The Cloud Forwarding feature in Log Management makes it very easy to forward all or only selected log messages from your …

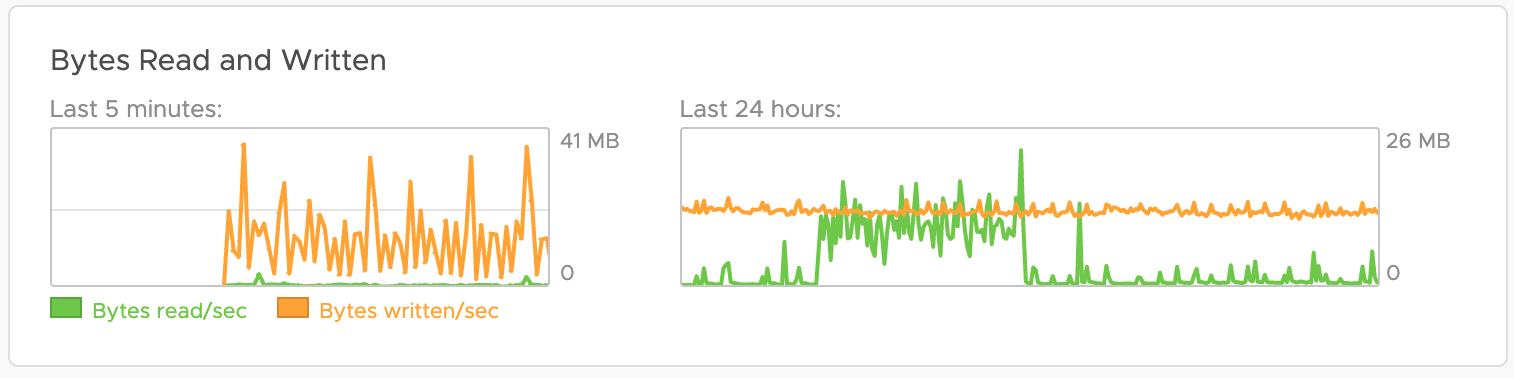

vRealize Log Insight Daily Disk Consumption – Quick Tip

Recently, I was asked how to quickly estimate the vRealize Log Insight (vRLI) data usage per day, and I checked several more or less obvious approaches. Usually, this question comes up when a customer is either redesigning the vRLI cluster or migrating to a vRealize Log Insight Cloud instance. To answer that question we …

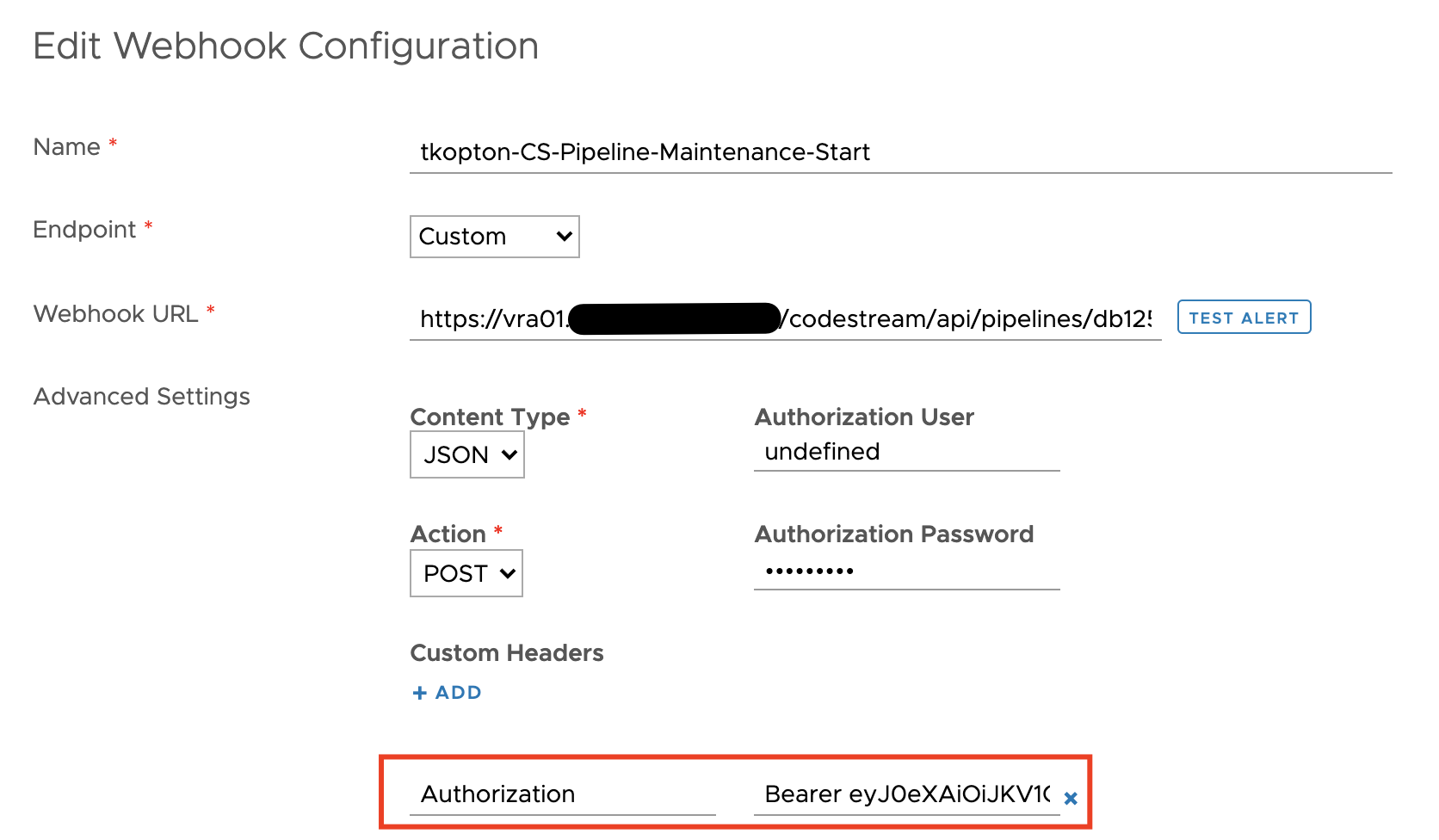

Quick Tip – Programmatically Update vRealize Log Insight Webhook Token

The Webhook feature in vRealize Log Insight is a great way to execute automation tasks, push elements into a RabbitMQ message queue, or start any other REST operation. Many endpoints providing such REST methods require token-based authentication, like the well-known Bearer Token. vRealize Automation is one example of such an endpoint. It is pretty easy …

vCenter Events as vRealize Operations Alerts using vRealize Log Insight

As you probably know, vRealize Operations provides several symptom definitions based on message events as part of the out-of-the-box vCenter Solution content. You can see some of them in the next picture. These events are used in alert definitions to raise vRealize Operations alarms any time one of those events is triggered in any of …

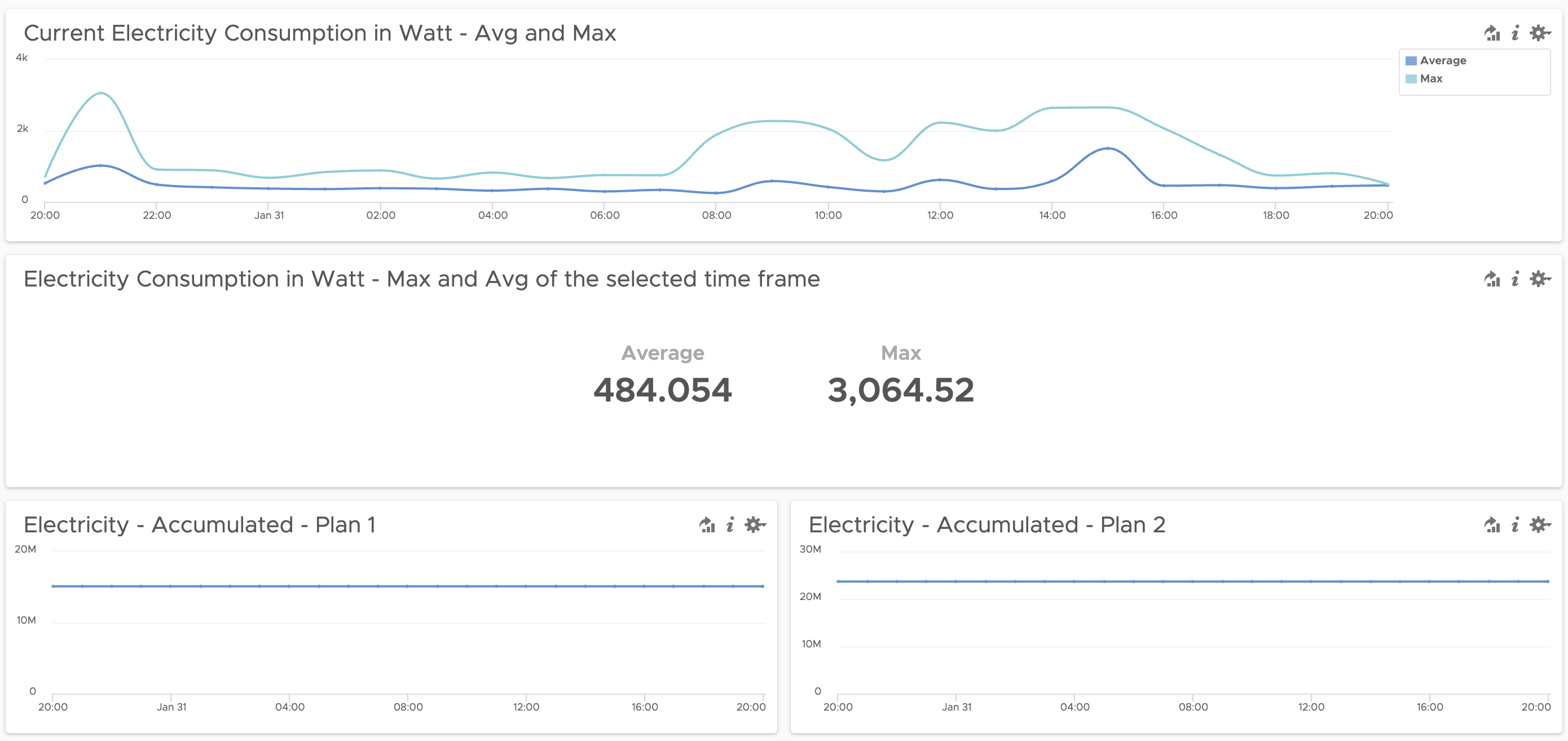

Energy Consumption Monitoring using SML Data and vRealize Log Insight

It all started with my last electricity bill. Shortly after I had recovered from the shock and made sure that I really did not have an aluminum smelter running in my basement, I decided I needed some kind of monitoring of my electric energy consumption. Insight into data is the first and probably most important step …

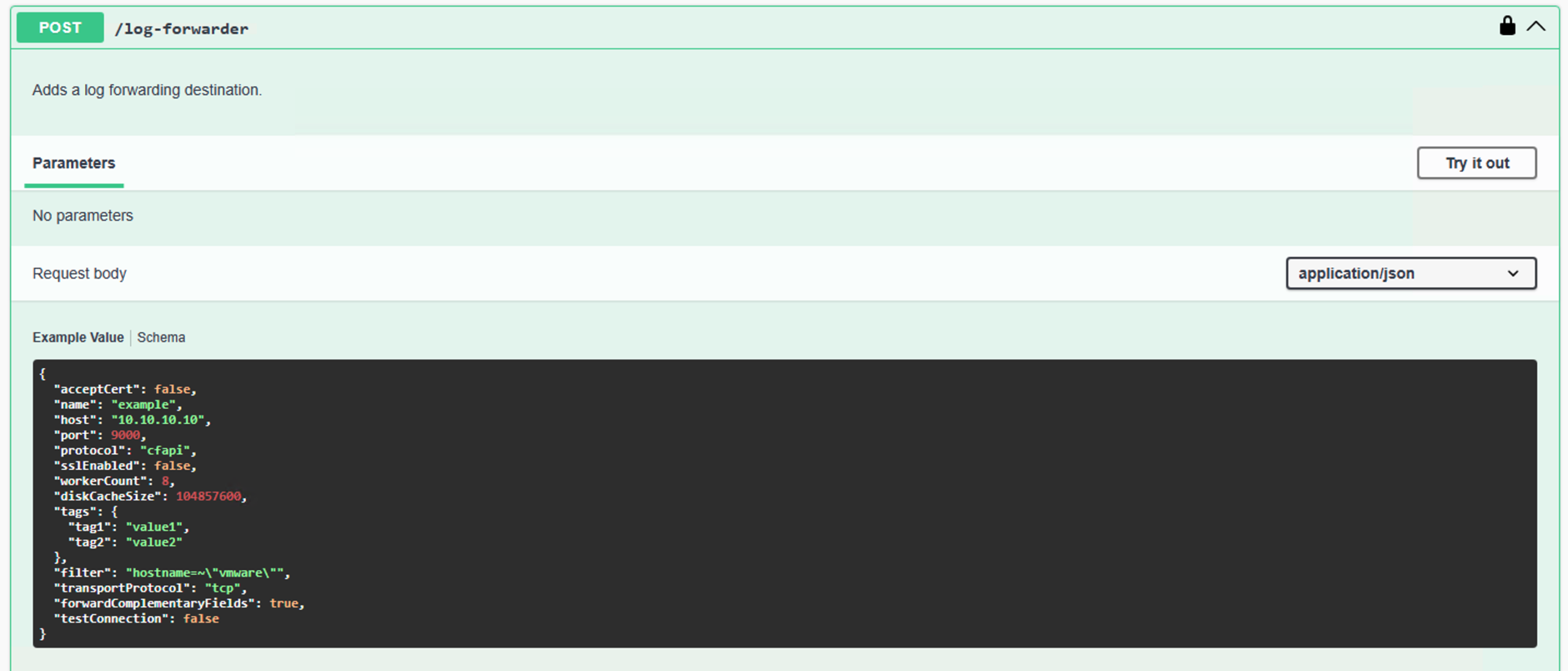

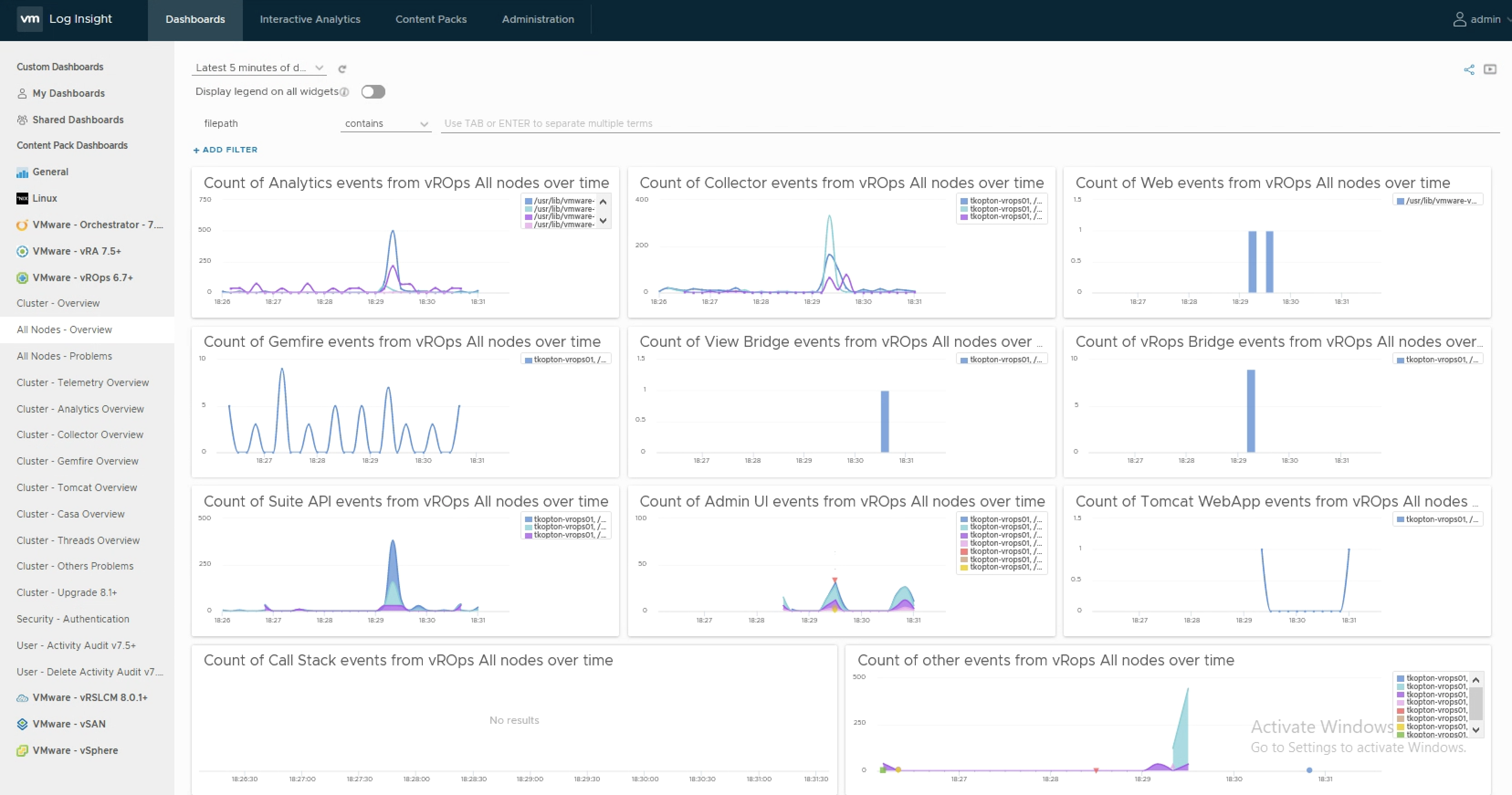

vRealize Operations and Logging via CFAPI and Syslog

Without any doubt, configuring vRealize Operations to send log messages to a vRealize Log Insight instance is the best way to collect, parse, and display structured and unstructured log information. In this post I will explain the major differences between CFAPI and Syslog as the protocol used to forward log messages to a log server …