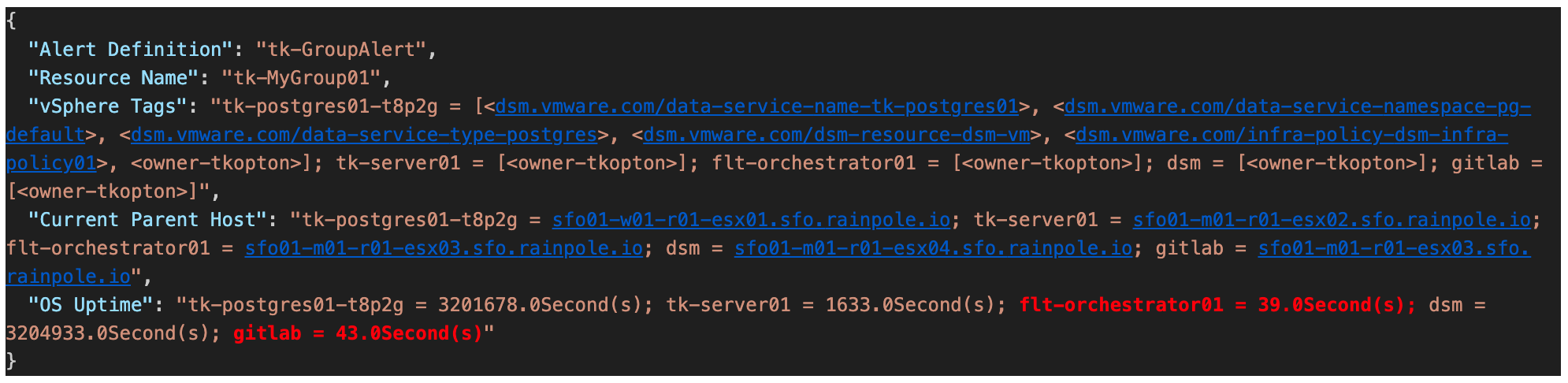

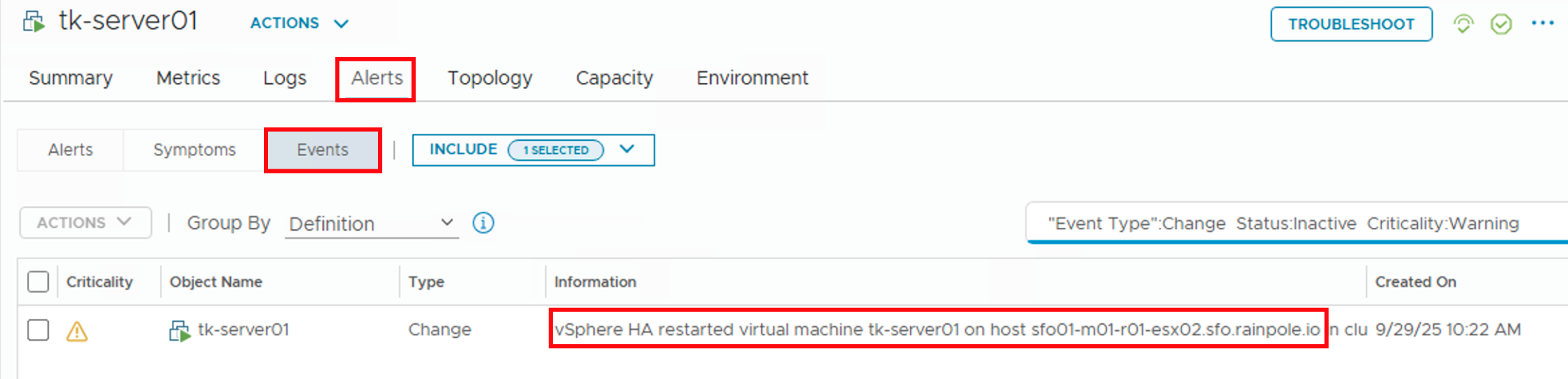

In my previous post (https://thomas-kopton.de/vblog/?p=2198), I detailed how to track HA-induced Virtual Machine restarts in VCF Operations, including the required symptoms, alert definitions, and REST Webhook notifications. However, in larger setups, with potentially over 20 VMs per ESX host, receiving 20 individual notifications is impractical. This simply creates alert fatigue and undermines the entire alerting …

Category: vROps

Monitoring HA VM Restarts in VCF Operations

Someone recently asked me whether there is a straightforward way to notify Virtual Machine owners as soon as their VMs are affected by an HA event. Apparently, suitable alarm definitions are not available out-of-the-box in VCF Operations, and the customer asked for a solution. Scenario The scenario is therefore quite simple: First Approach Initially, I considered …

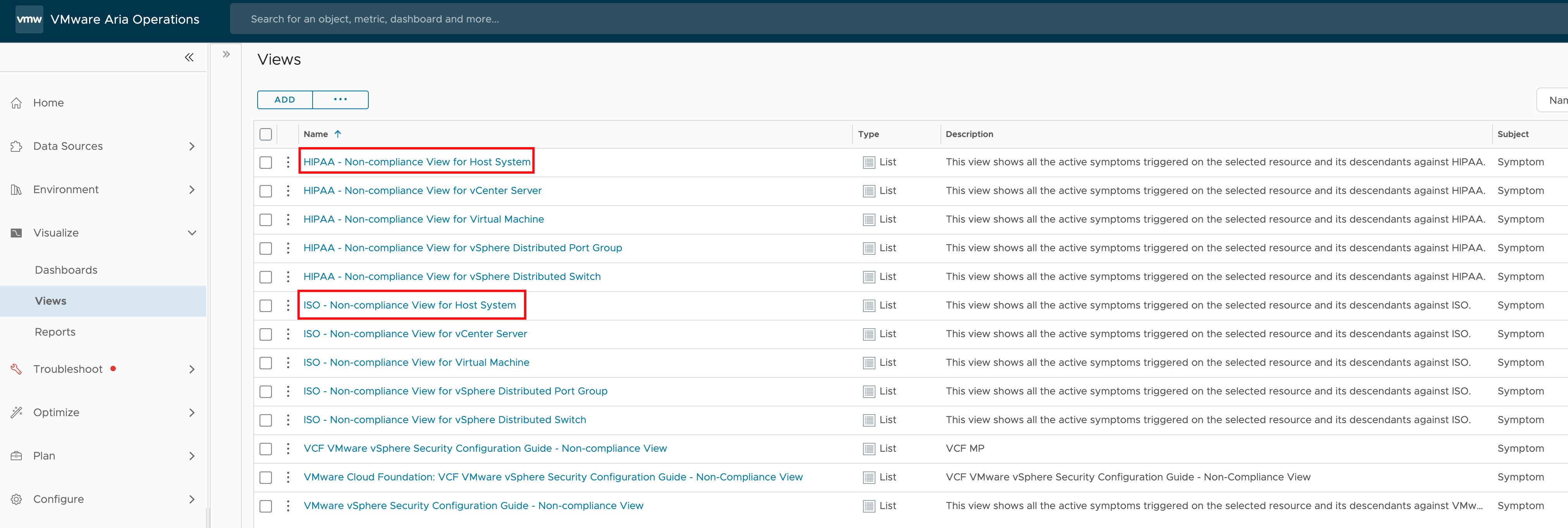

Programmatically Accessing VMware Aria Operations Compliance Check Results

Recently, I was asked if there’s a way to programmatically read the results of a compliance check for specific ESXi hosts in VMware Aria Operations. The use case here is that the customer wants to utilize these compliance results in an automated workflow. This is an interesting question that highlights the growing need for automation …

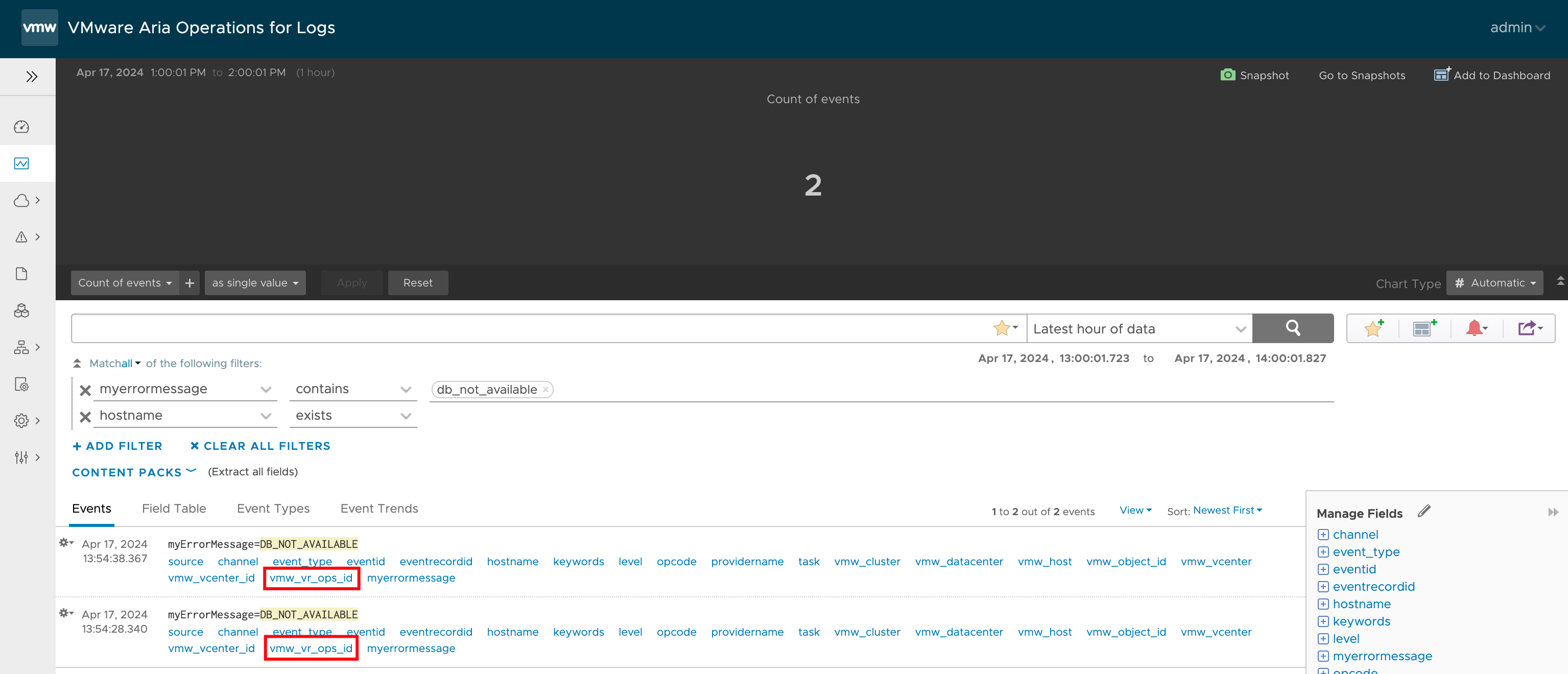

VMware Aria Operations for Logs Alerts as Symptoms in Aria Operations

As most readers likely know, the integration between VMware Aria Operations and Aria Operations for Logs forwards alarms generated in Aria Operations for Logs to Aria Operations. If the integration has also established the link between the origin of the log message that triggered the alarm and the object in Aria Operations, the …

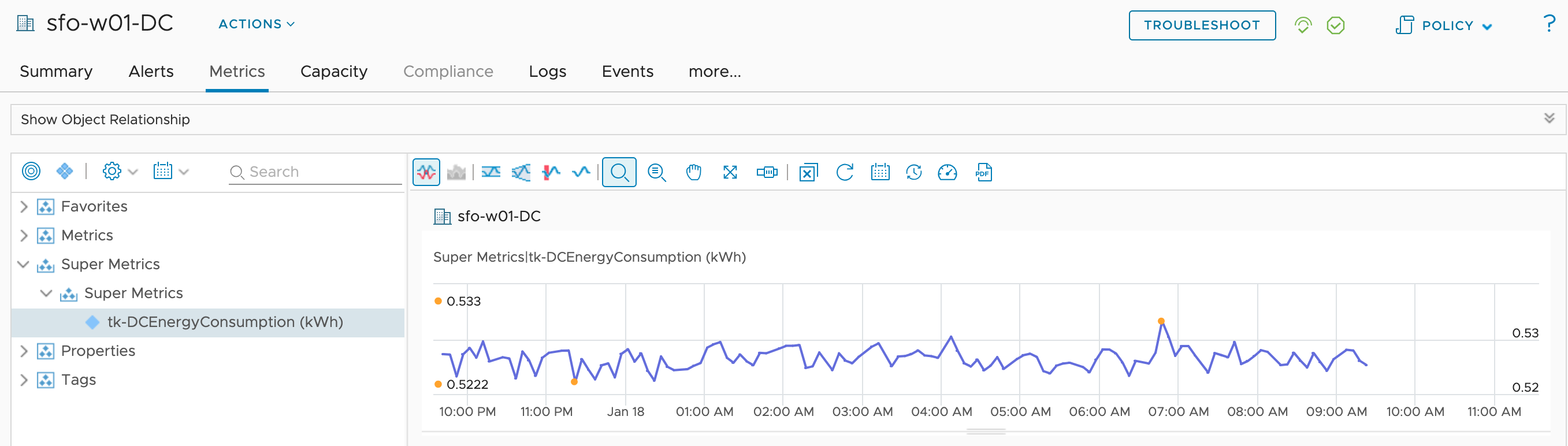

Energy Data at Datacenter Level using VMware Aria Operations

As you probably know, VMware Aria Operations provides energy consumption and sustainability data at several levels, from the power usage of a single Virtual Machine up to the aggregated data at the vSphere Cluster (Cluster Compute Resource) level. What we are missing, at least as of today, are similar aggregated metrics …

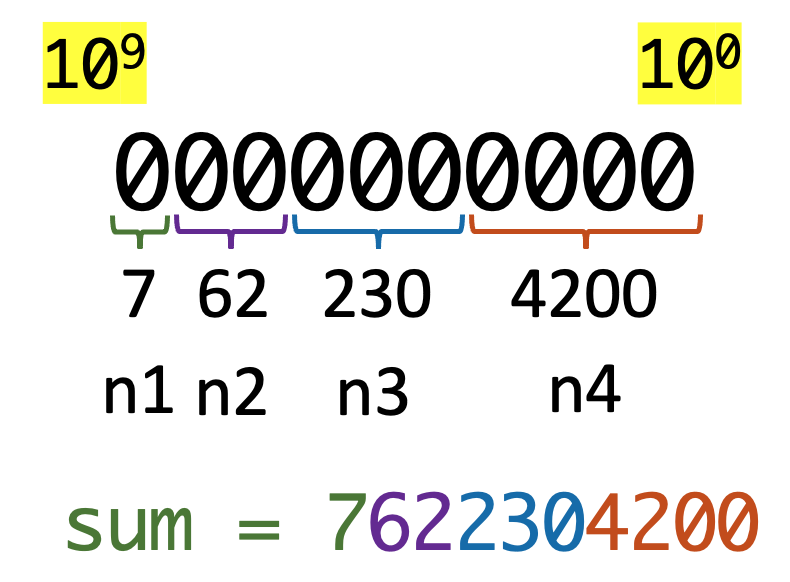

Multiple Metrics with Aria Operations Telegraf Custom Scripts

When using VMware Aria Operations, integrating Telegraf can significantly enhance your monitoring capabilities, provide more extensive and customizable data collection, and help ensure the performance, availability, and efficiency of your infrastructure and applications. Using the Telegraf agent, you can run custom scripts in the endpoint VM and collect custom data which can then be …

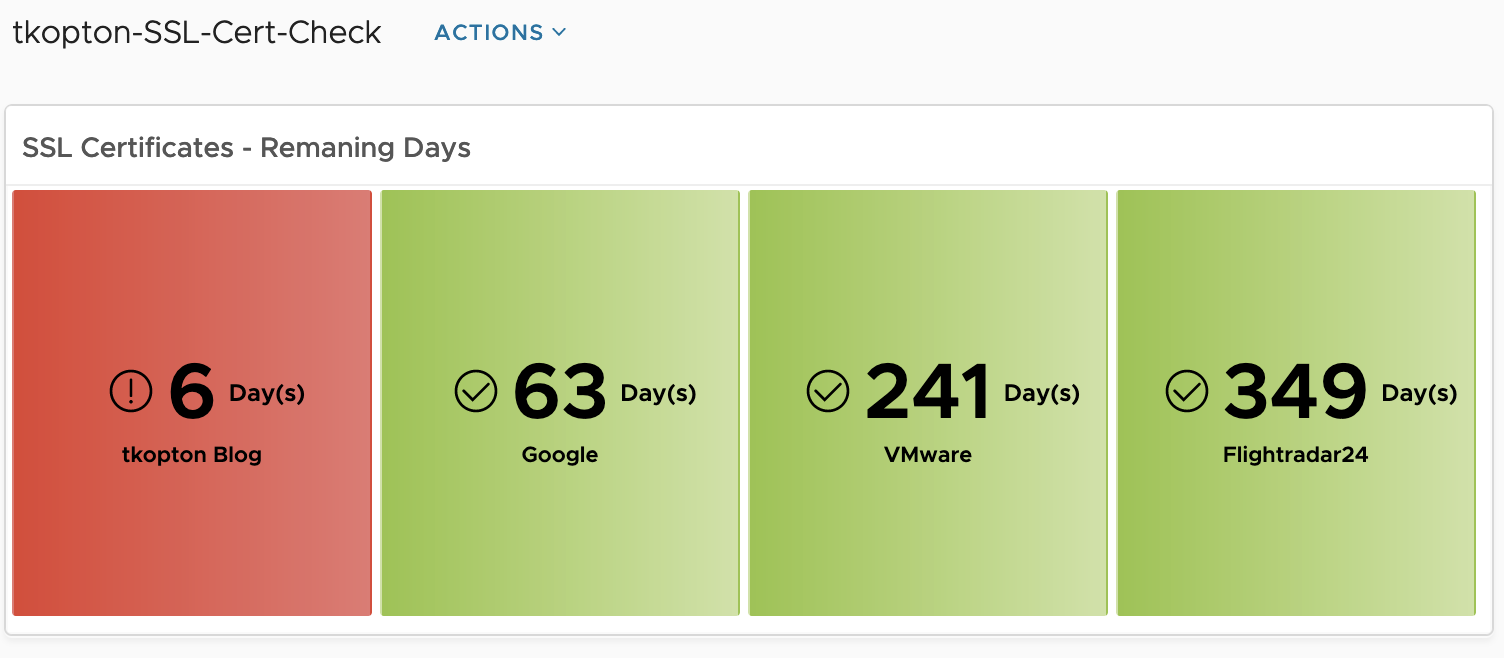

Checking SSL/TLS Certificates using Aria Operations – Update

In my article “Checking SSL/TLS Certificate Validity Period using vRealize Operations Application Monitoring Agents”, published in 2020, I described how to check the remaining validity of SSL/TLS certificates using Aria Operations or, to be more specific, using vRealize Operations 8.1 and 8.2 at the time. I did not expect this post to be …

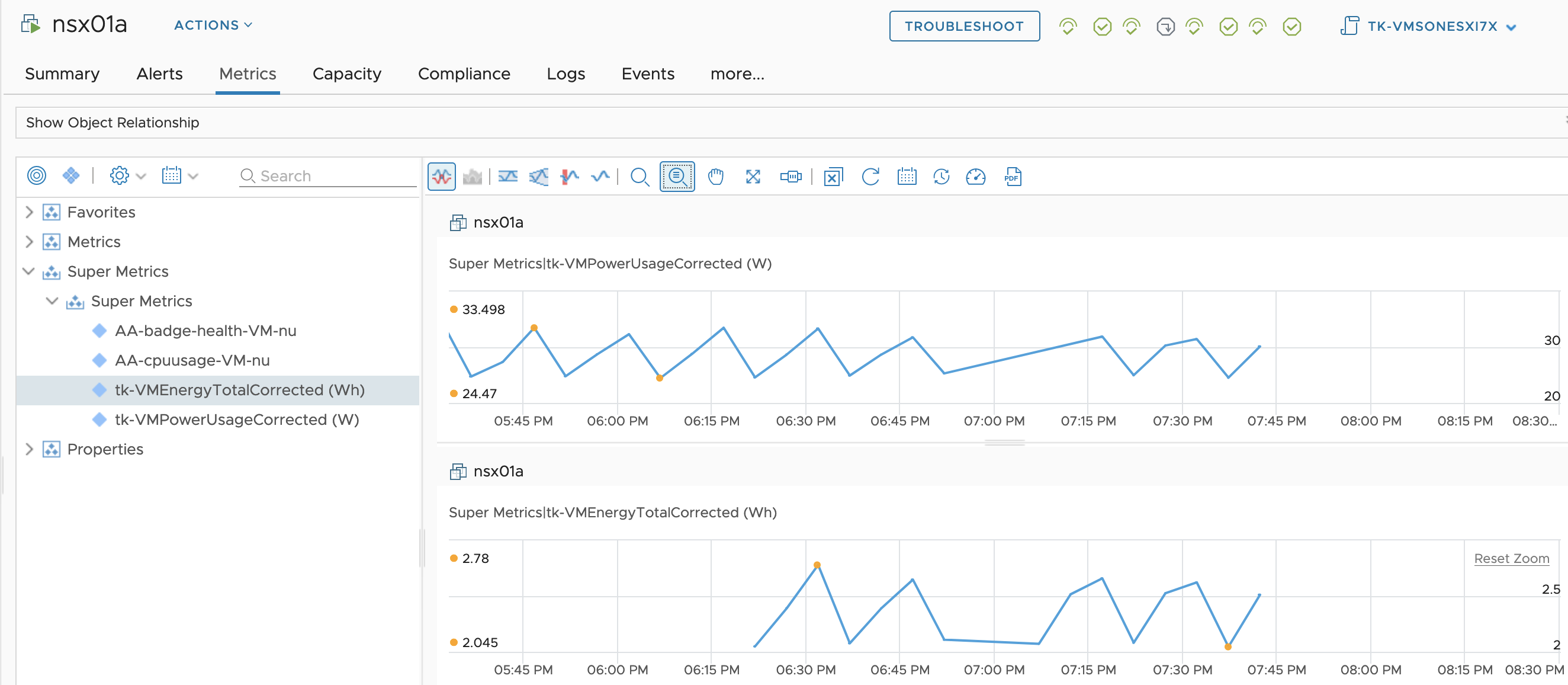

Fixing “Virtual Machine Power Metrics Display in mW” using Aria Operations

In VMware Aria Operations 8.6 (previously known as vRealize Operations), VMware introduced pioneering sustainability dashboards designed to display the amount of carbon emissions saved through compute virtualization. Additionally, these dashboards offer insights into reducing the carbon footprint by identifying and optimizing idle workloads. This progress was taken even further with the introduction of Sustainability …

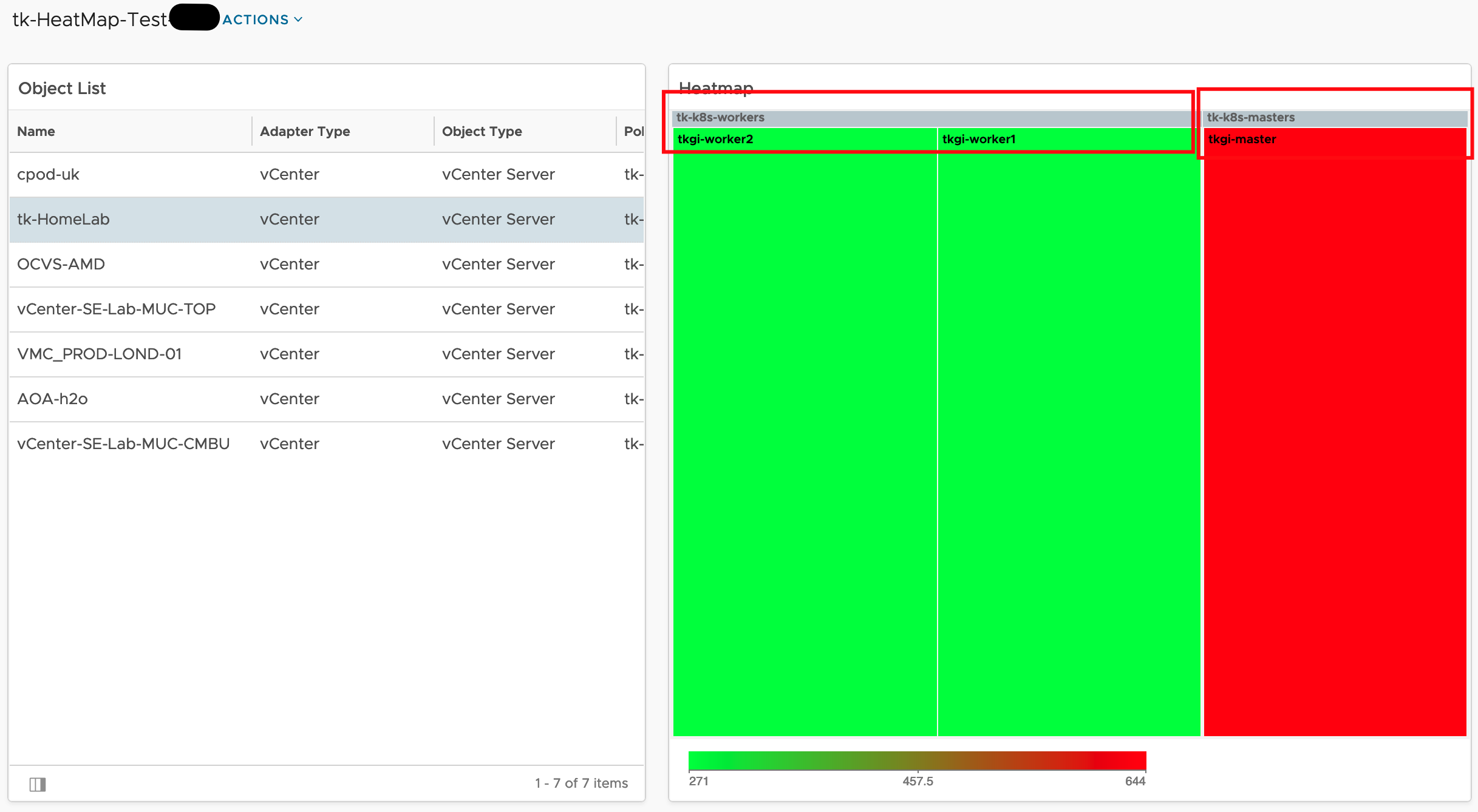

Aria Operations Heatmap Widget meets Custom Groups – Grouping by Property

The Aria Operations Heatmap Widget, which is used in many dashboards, can group the objects within the heatmap by other related object types, as in the following screenshot showing Virtual Machines grouped by their respective vSphere Cluster. Problem description: This is a great feature, but the grouping works only using object …

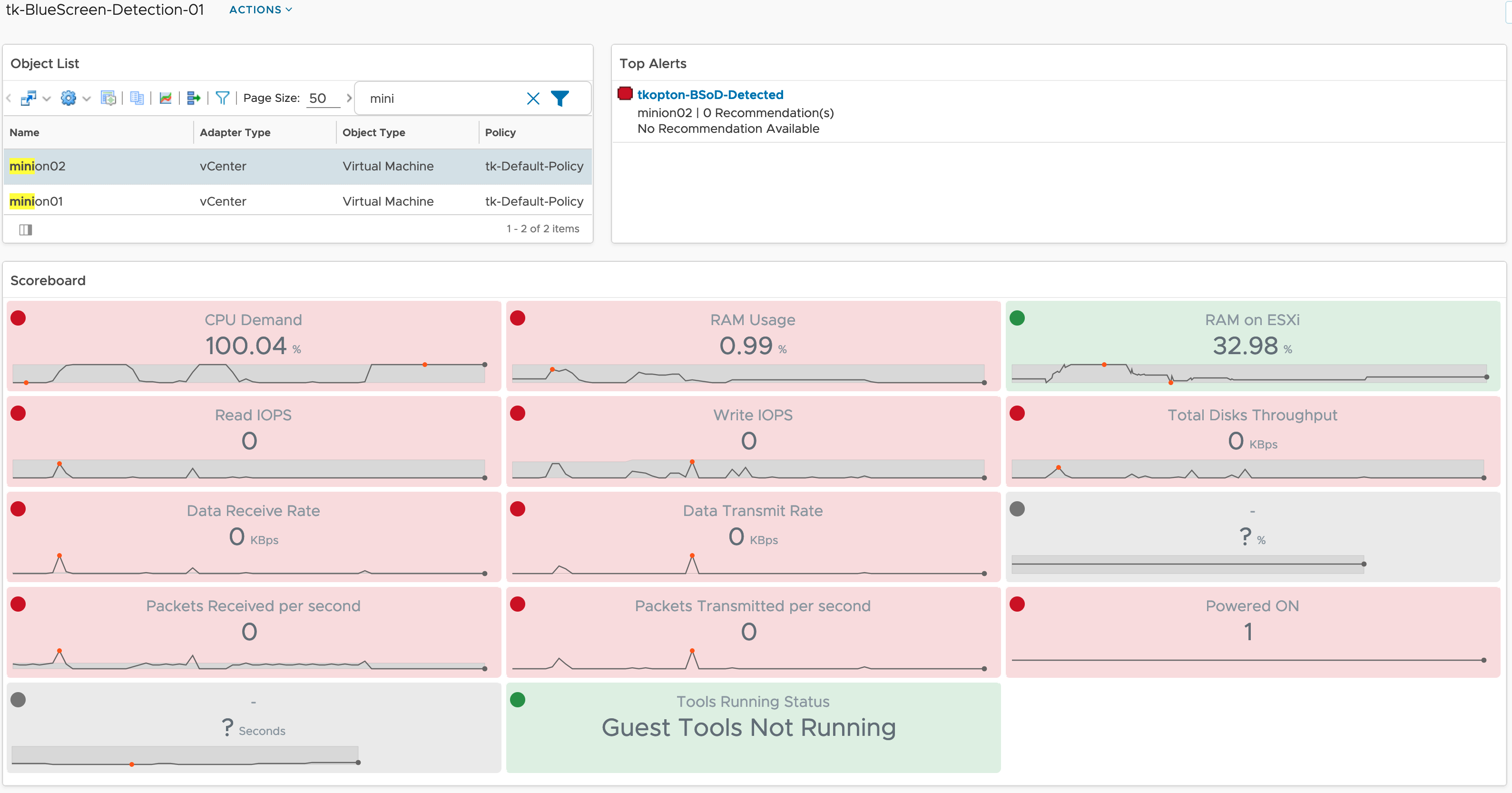

How to detect Windows Blue Screen of Death using VMware Aria Operations

Problem statement: Recently, a customer asked me what would be the best way to get alerted by VMware Aria Operations when a Windows VM has stopped because of a Blue Screen of Death (BSoD) or a Linux machine has suddenly quit working due to a Kernel Panic. Even if it looks like a piece of …