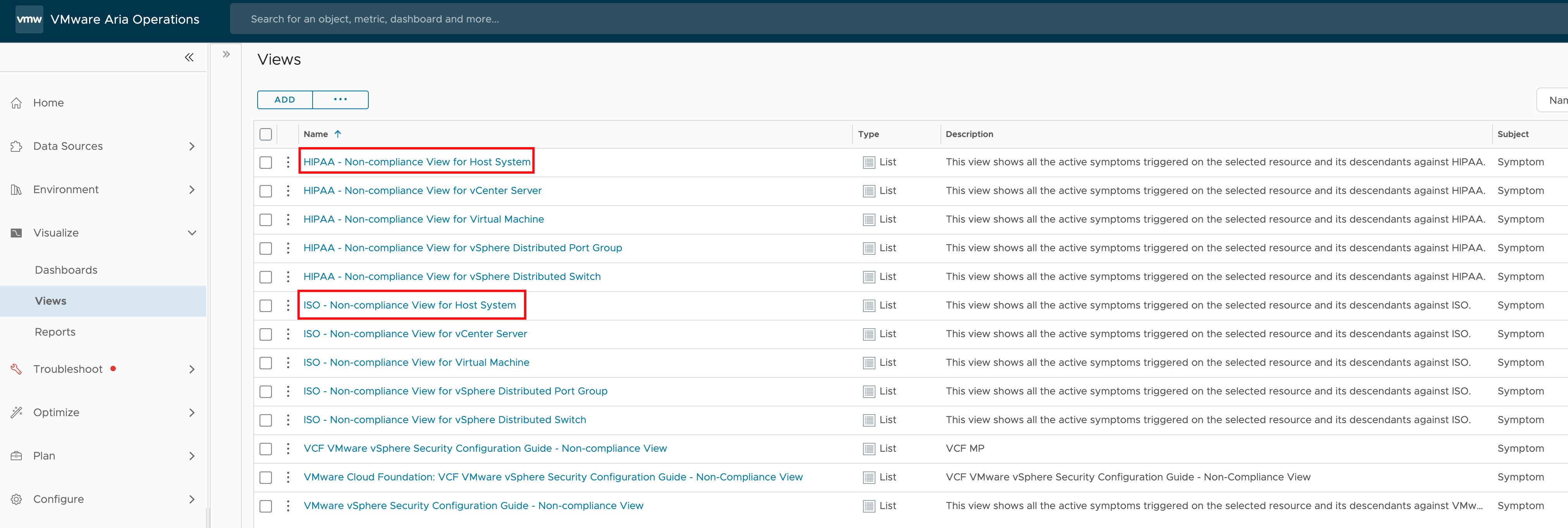

Recently, I was asked if there’s a way to programmatically read the results of a compliance check for specific ESXi hosts in VMware Aria Operations. The use case here is that the customer wants to utilize these compliance results in an automated workflow. This is an interesting question that highlights the growing need for automation …

Tag: REST

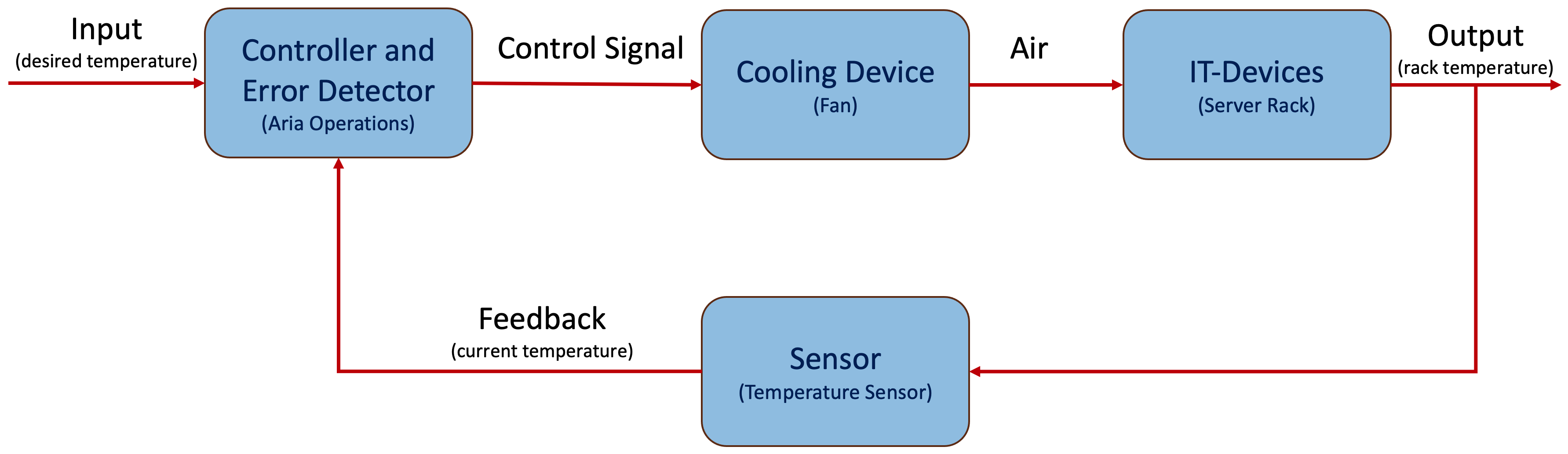

Effortless Energy Savings – Air Conditioning Control with VMware Aria Operations

In a series of recent blog posts, I’ve delved into the fascinating realm of VMware Aria Operations, uncovering its remarkable capabilities in analyzing energy consumption, energy costs, and carbon emissions attributed to diverse elements within a Software Defined Data Center (SDDC). Beyond just elucidating these features, I’ve also spotlighted the seamless integration of Aria Operations …

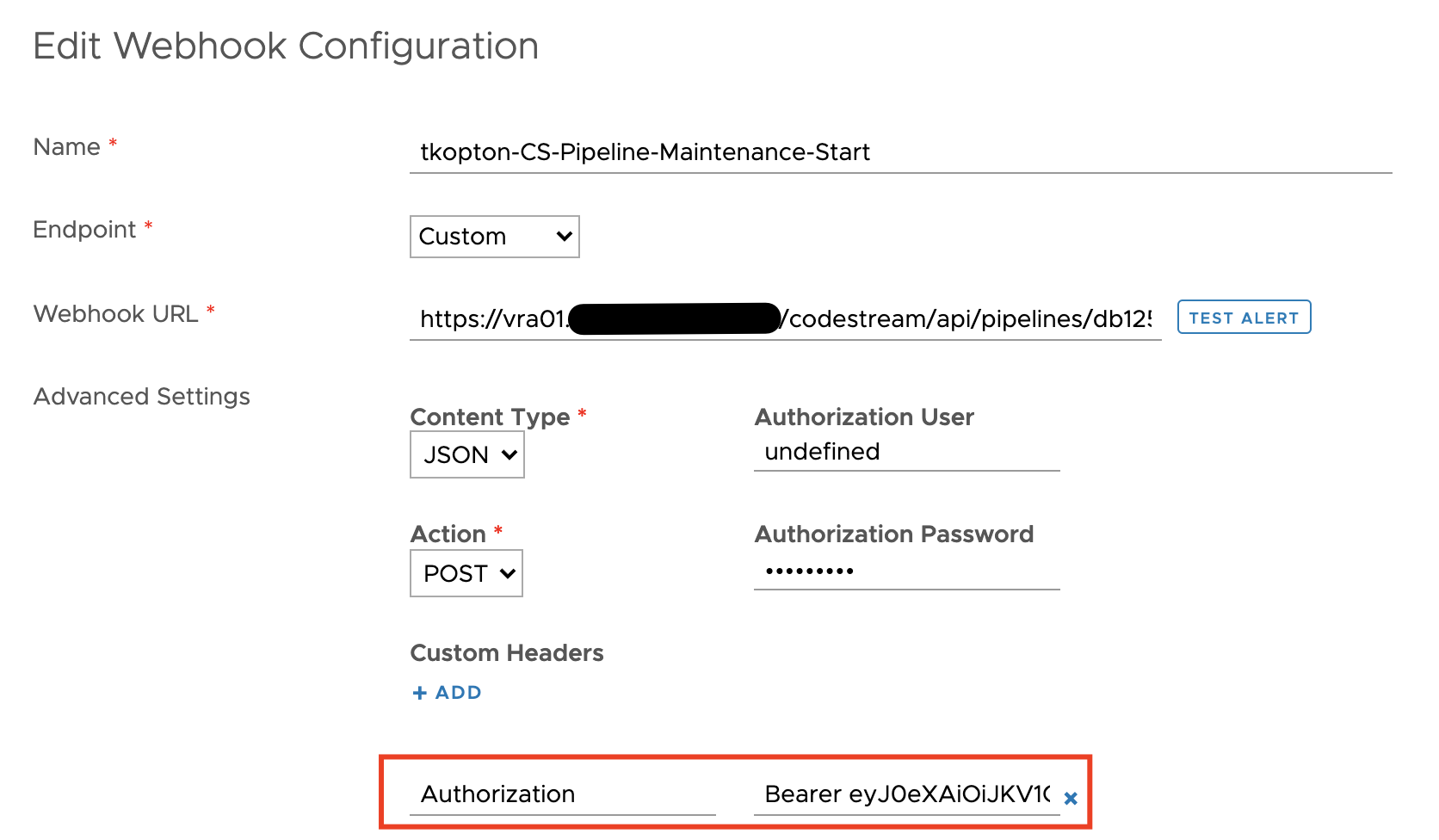

Quick Tip – Programmatically Update vRealize Log Insight Webhook Token

The Webhook feature in vRealize Log Insight is a great way to execute automation tasks, push elements into a RabbitMQ message queue or start any other REST operation. Many endpoints providing such REST methods require token-based authentication, like the well-known Bearer Token. vRealize Automation is one example of such an endpoint. It is pretty easy …

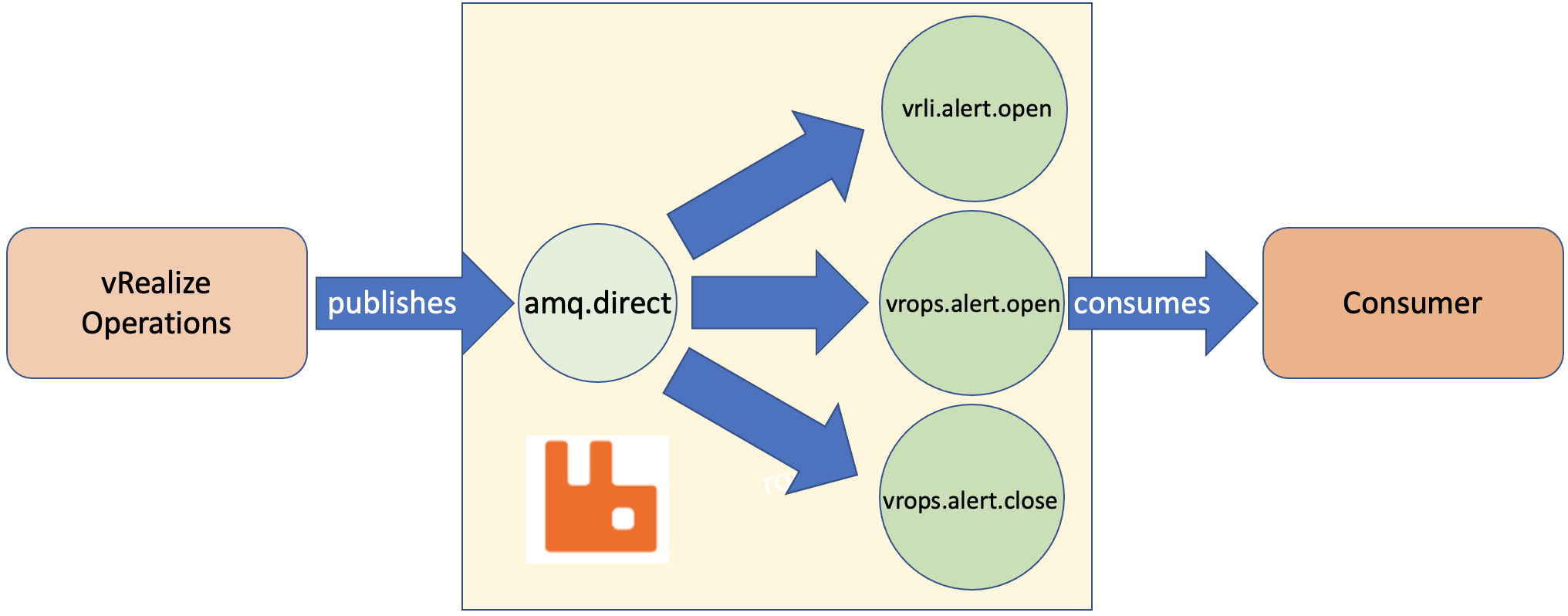

vRealize Operations AMQP Integration using Webhooks

With version 8.4, vRealize Operations introduced the Webhook Outbound Plugin feature. This new Webhook outbound plugin works without any additional software, so the Webhook Shim server becomes obsolete. In this post, I will explain how to integrate vRealize Operations with an AMQP system. For this exercise, I have deployed a RabbitMQ server but the concept …

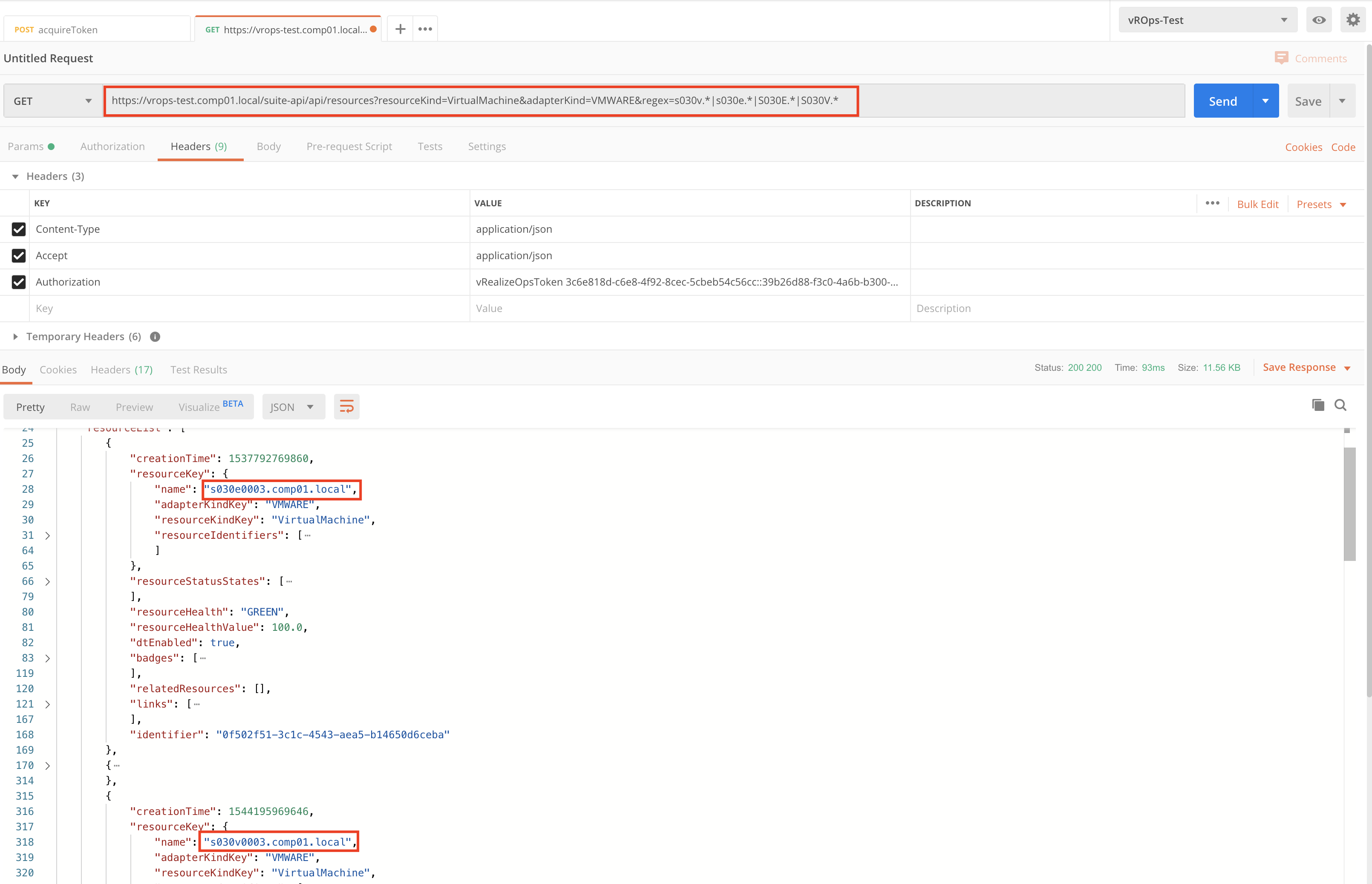

Quick Tip – vROps REST API requests using RegEx expressions

The vRealize Operations REST API allows using RegEx expressions in various GET methods. Sometimes it is not clear how to use the expressions. Here is a very simple example of using RegEx to retrieve vROps Virtual Machine objects whose VM names start with certain strings. Encoded URL – example: https://vrops-test.comp01.local/suite-api/api/resources?resourceKind=VirtualMachine&adapterKind=VMWARE&regex=s030v.*%7Cs030e.*%7CS030E.* Example in Postman (of course, …
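As a rough illustration of the encoding step, the sketch below assembles the same kind of URL in Python using only the standard library. The helper name `build_resources_url` is hypothetical and not part of any vROps SDK; the host, path and query parameters are taken from the example URL above. It only builds the string and does not send a request or handle authentication.

```python
from urllib.parse import quote, urlencode


def build_resources_url(host, name_patterns,
                        resource_kind="VirtualMachine",
                        adapter_kind="VMWARE"):
    """Build a /suite-api/api/resources URL with a RegEx name filter.

    Hypothetical helper for illustration only; the parameter names
    mirror the query string shown in the example URL.
    """
    # Join the individual patterns with '|' (RegEx alternation).
    regex = "|".join(name_patterns)
    # Percent-encode the query; '.' and '*' stay literal, '|' becomes %7C.
    query = urlencode(
        {
            "resourceKind": resource_kind,
            "adapterKind": adapter_kind,
            "regex": regex,
        },
        safe=".*",
        quote_via=quote,
    )
    return f"https://{host}/suite-api/api/resources?{query}"


url = build_resources_url(
    "vrops-test.comp01.local",
    ["s030v.*", "s030e.*", "S030E.*"],
)
print(url)
```

The alternation character `|` is the part that must be percent-encoded (`%7C`); sending it unencoded is a common reason such a request fails in some clients.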

Maintenance Mode for vRealize Operations Objects, Part 1

In this and the following posts I will show you a few different ways of putting vROps objects into maintenance. Objects in vROps – short intro The method used to mark an object as being in the maintenance state depends on the actual use case. As usual, the use case itself is defined by: Requirements – …

Adding custom objects to vRealize Operations with the REST API and vRealize Orchestrator workflows

In a recent blog post “Adding Custom Metrics and Properties to vRealize Operations Objects with the REST API” I have described how to add additional metrics and properties to existing objects in vRealize Operations using the REST API and Postman. But what if you would like to use the outstanding vRealize Operations engine to manage …