When using VMware Aria Operations, integrating Telegraf can significantly enhance your monitoring capabilities, provide more extensive and customizable data collection, and help ensure the performance, availability, and efficiency of your infrastructure and applications. Using the Telegraf agent, you can run custom scripts in the endpoint VM and collect custom data which can then be …

Category: Content

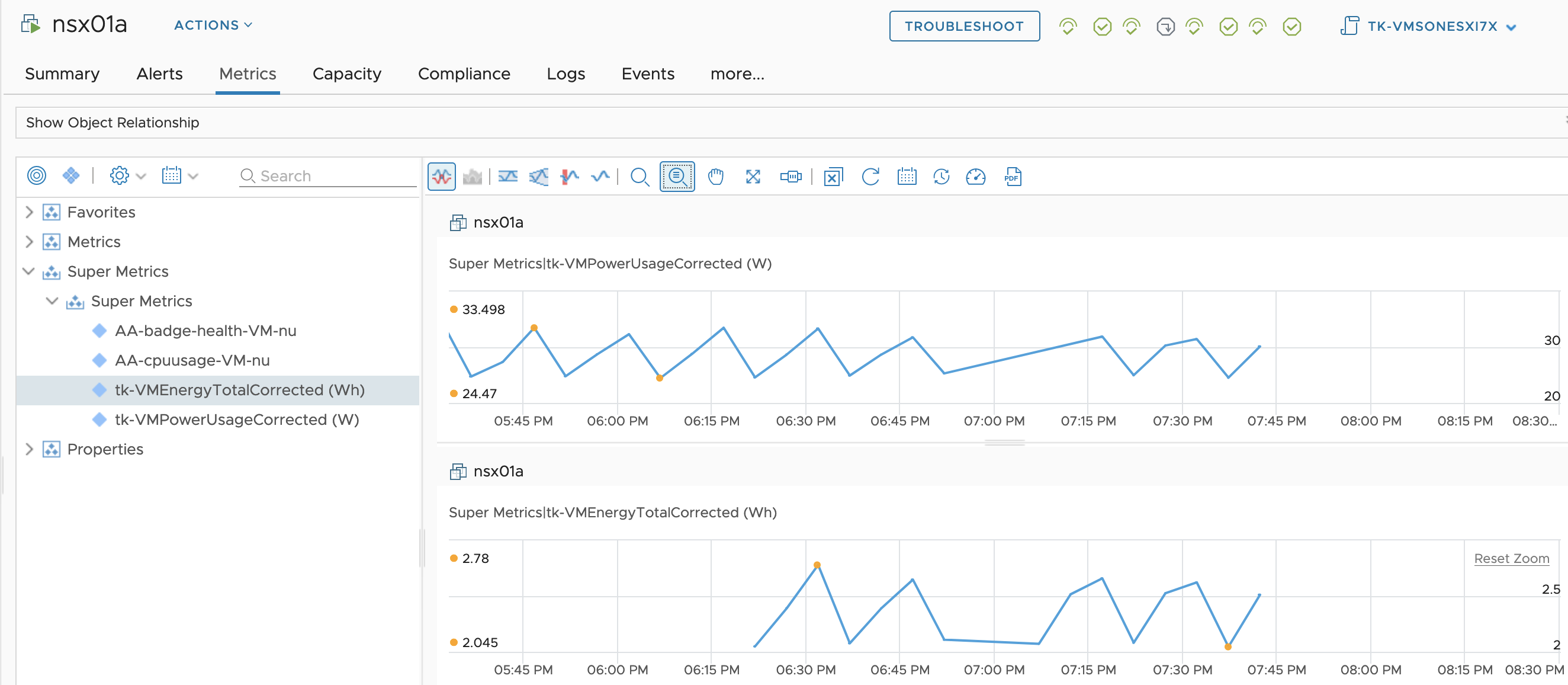

Fixing “Virtual Machine Power Metrics Display in mW” using Aria Operations

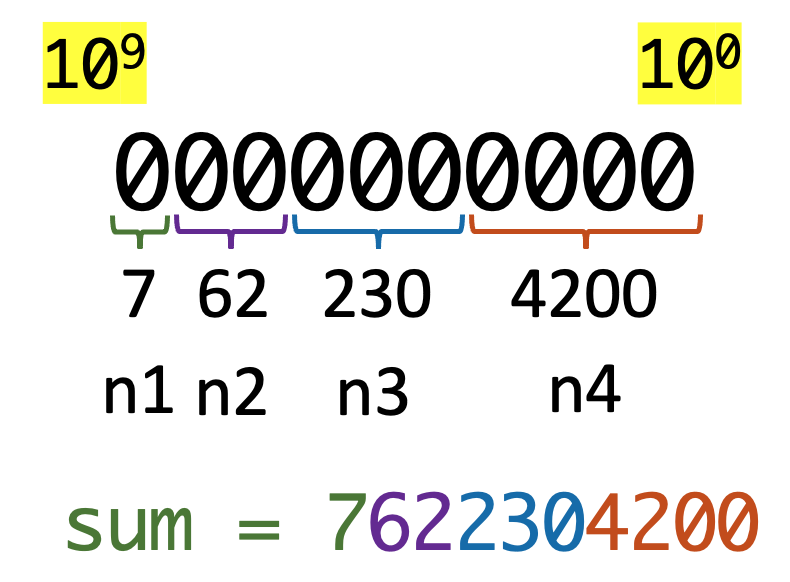

In VMware Aria Operations 8.6 (previously known as vRealize Operations), VMware introduced pioneering sustainability dashboards designed to display the amount of carbon emissions saved through compute virtualization. These dashboards also offer insights into reducing the carbon footprint by identifying and optimizing idle workloads. This progress was taken even further with the introduction of Sustainability …

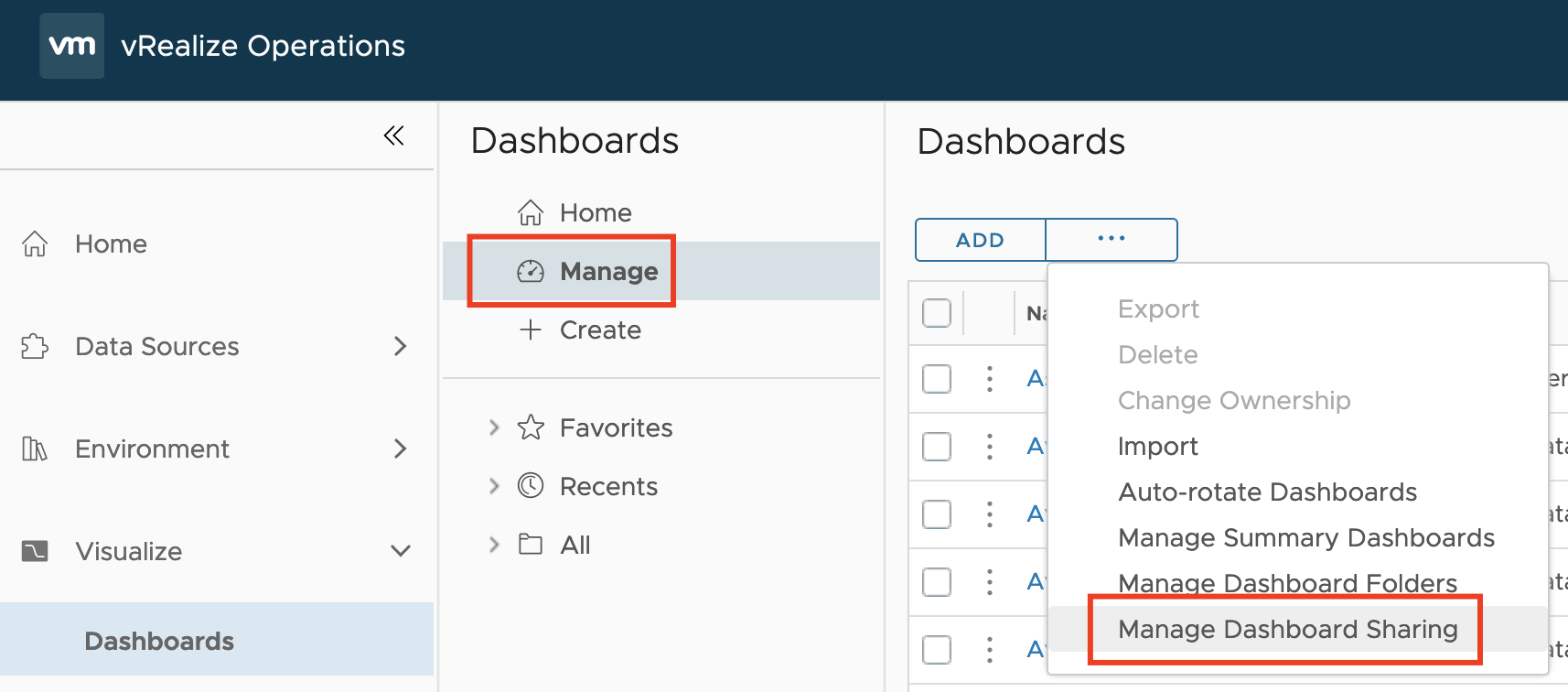

VMware Explore Follow-up 2 – Aria Operations Dashboard Permissions Management

Another question I was asked during my “Meet the Expert – Creating Custom Dashboards” session, which I could not answer due to the limited time, was: “How to manage access permissions to Aria Operations Dashboards in a way that will allow only a specific group of content admins to edit only a specific group of dashboards?” Even …

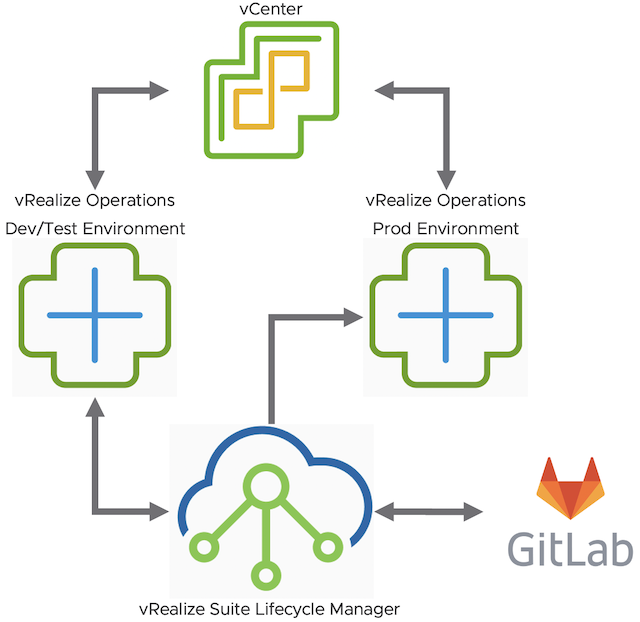

vRealize Operations Content Management – CD Pipeline – Part 1

vRealize Operations provides a wide range of content out of the box (OOB). It gives Ops teams a variety of dashboards, views, alerts, etc. to run and manage their environments. Sooner or later, and in most cases sooner rather than later, vROps users will create their own content. It might be completely new dashboards or maybe just adjusted alert …