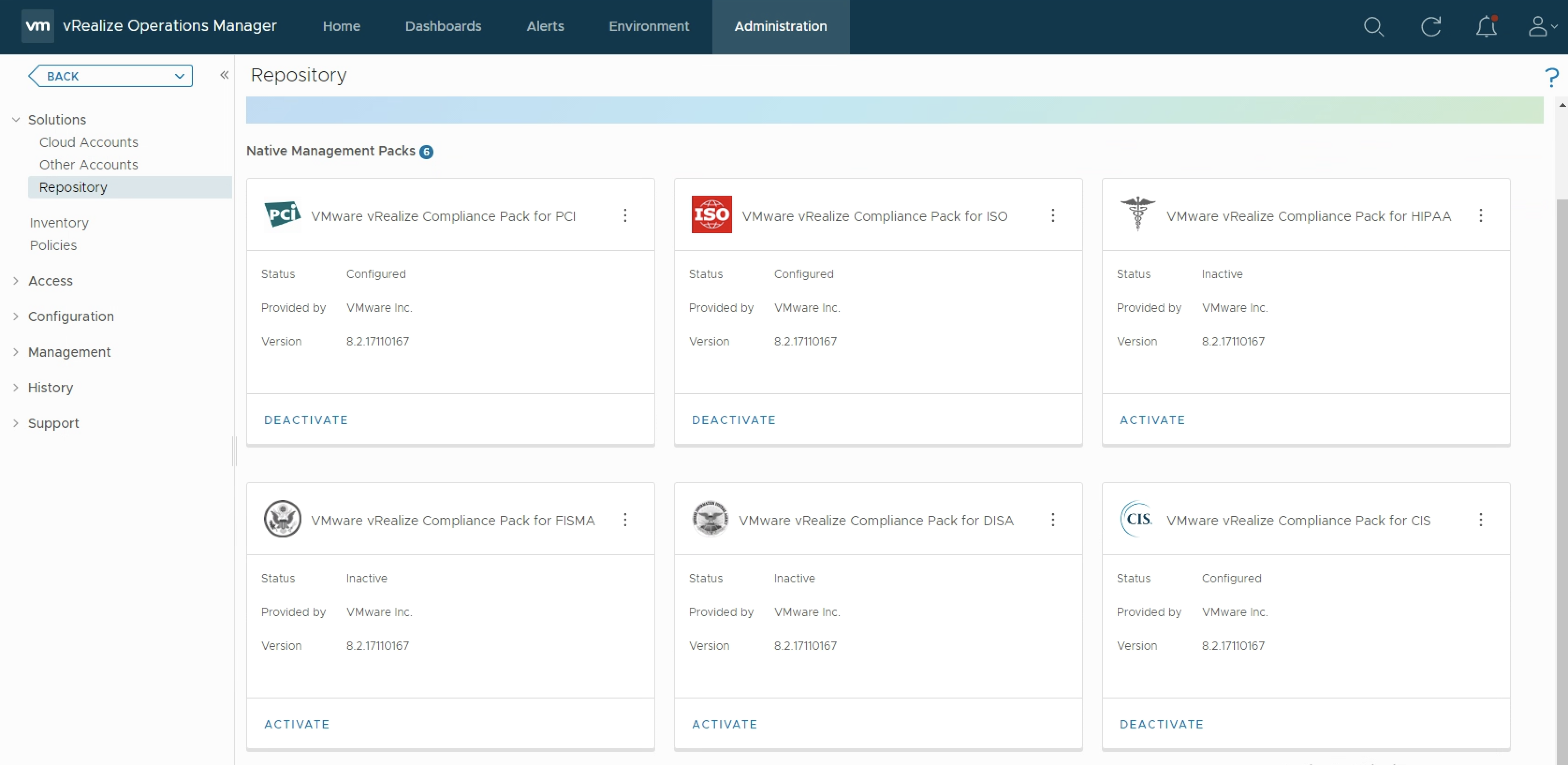

As you probably know, vRealize Operations provides several Compliance Packs out of the box (“natively”). A single click on “ACTIVATE” in the “Repository” tab installs all required components of the Compliance Pack and lets you run the corresponding regulatory benchmarks. “Regulatory benchmarks provide solutions for industry standard regulatory compliance requirements to enforce and report on the …

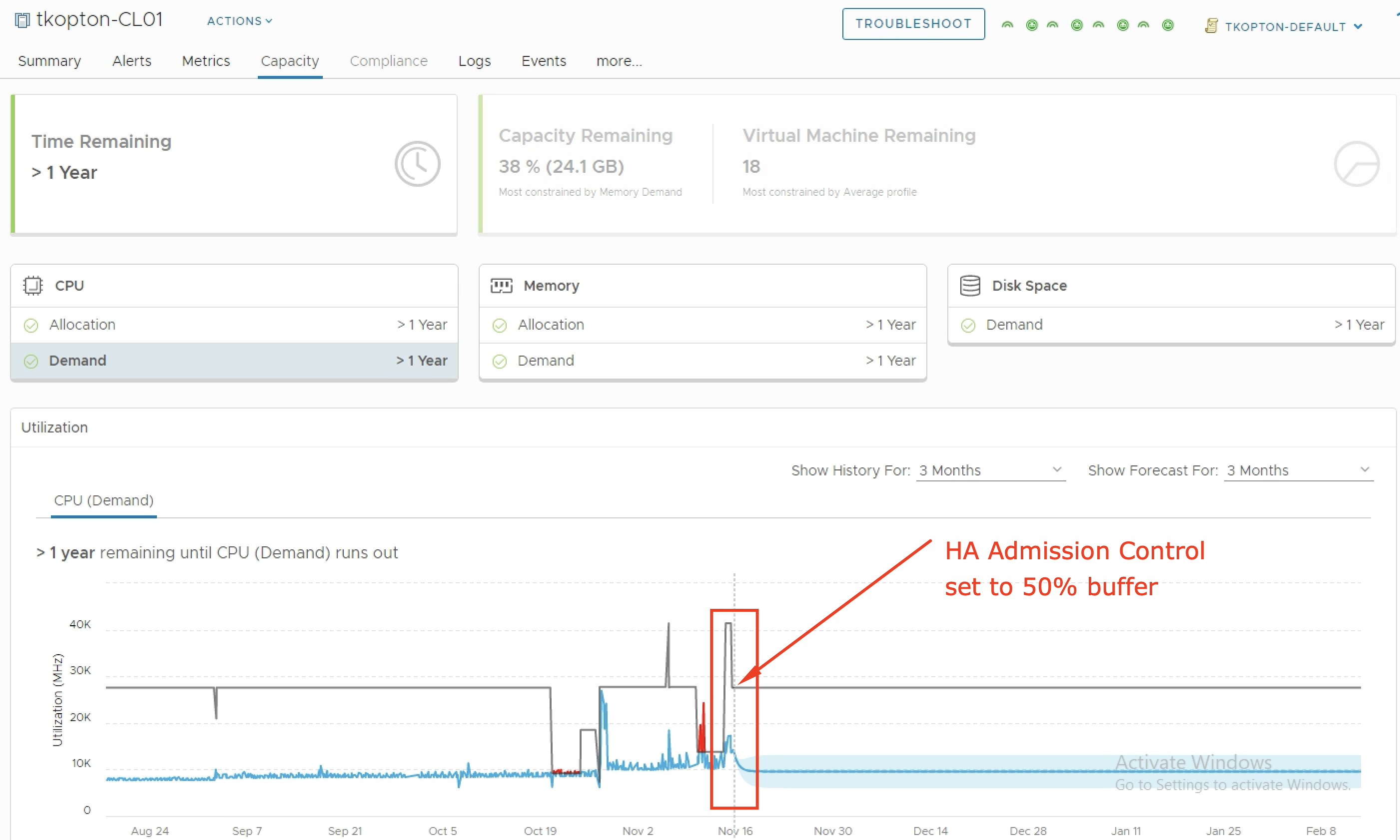

Capacity Management for n+1 and n*2 Clusters using vRealize Operations

When it comes to capacity management in vSphere environments using vRealize Operations, customers frequently ask for guidelines on how to set up vROps to properly manage n+1 and n*2 ESXi clusters. As a short reminder, n+1 in the context of an ESXi cluster means that we tolerate (and are hopefully prepared for) the failure of …

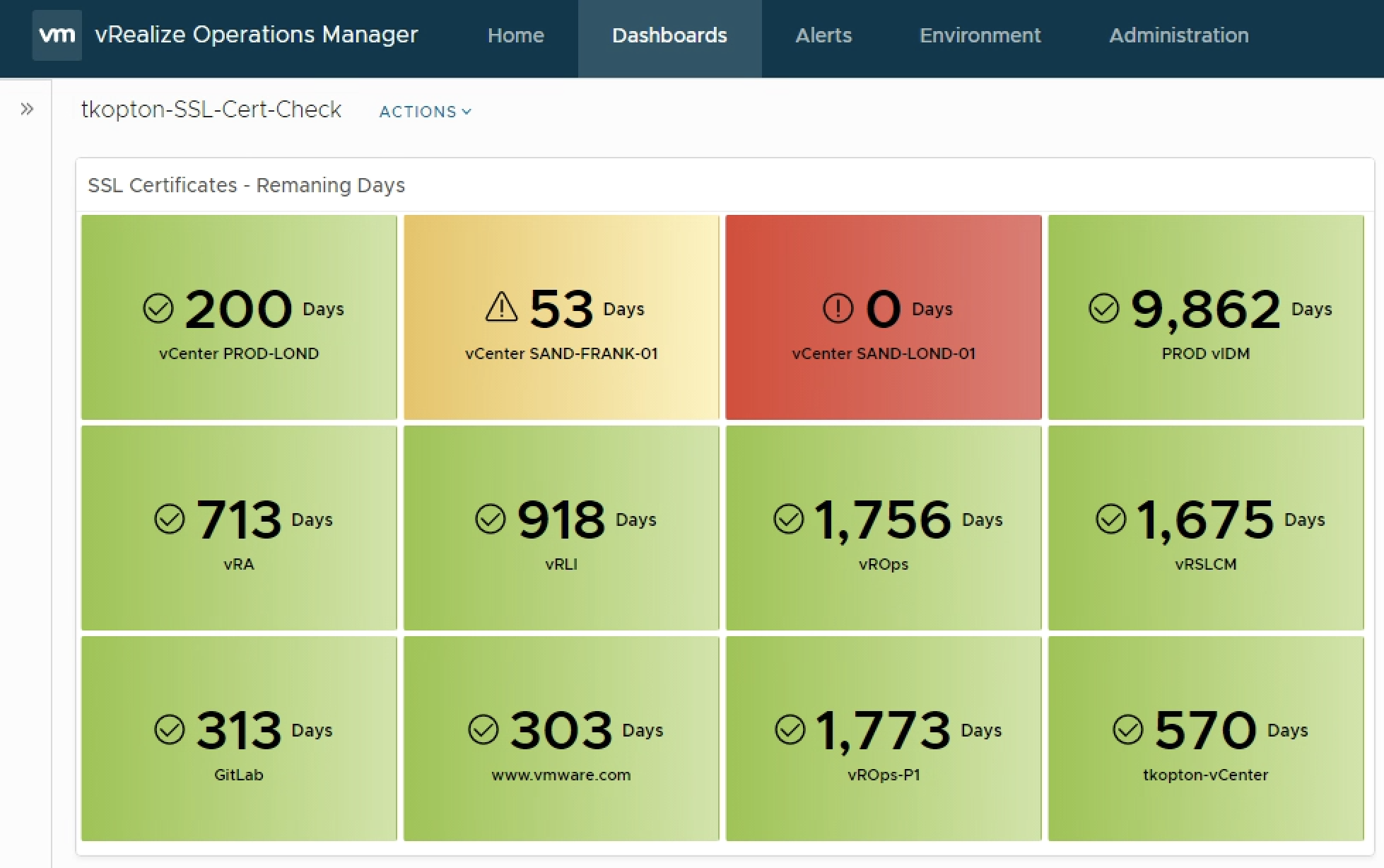

Checking SSL/TLS Certificate Validity Period using vRealize Operations Application Monitoring Agents

In my 2019 article “Checking SSL/TLS Certificate Validity Period using vRealize Operations and End Point Operations Agent” on the VMware Cloud Management Blog (https://blogs.vmware.com/management/2019/05/checking-ssl-tls-certificate-validity-period-using-vrealize-operations-and-end-point-operations-agent.html), I described how to check the remaining validity of SSL/TLS certificates. The method back then was to use the End Point Operations Agents. Since vRealize Operations 7.5 new Application Monitoring capabilities …

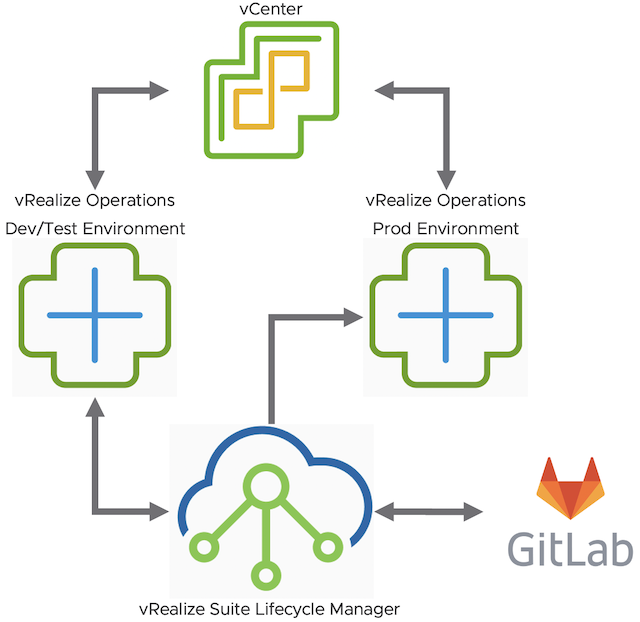

vRealize Operations Content Management – CD Pipeline – Part 1

vRealize Operations provides a wide range of content out of the box (OOB). It gives Ops teams a variety of dashboards, views, alerts, etc. to run and manage their environments. Sooner or later, and in most cases sooner, vROps users will create their own content. It might be completely new dashboards or maybe just adjusted alert …

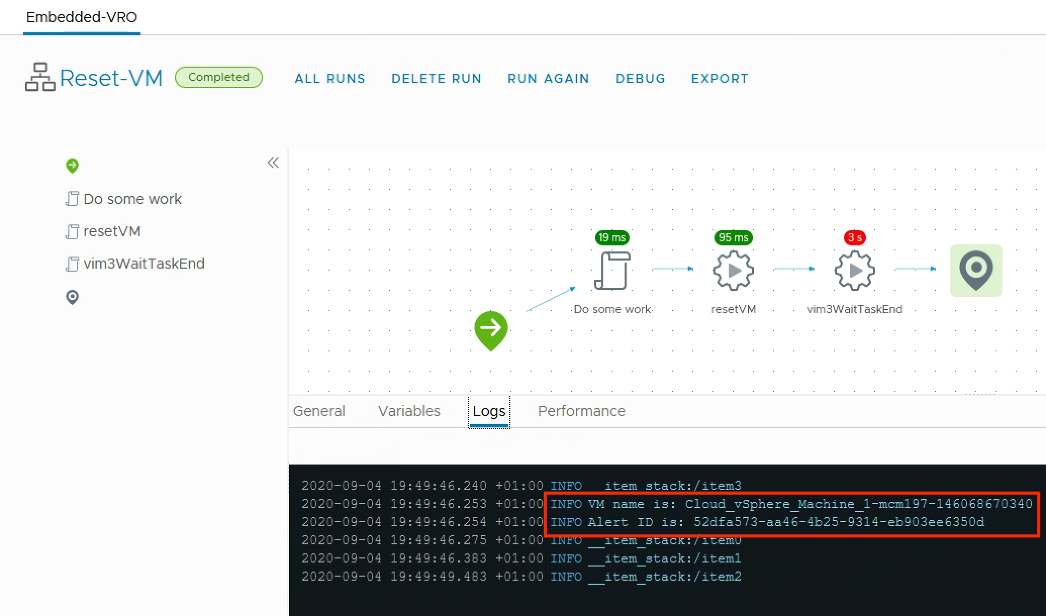

Self-Healing with vRealize Operations and vRealize Orchestrator

The vRealize Operations Management Pack for vRealize Orchestrator provides the ability to execute vRO workflows as part of the alerting and remediation process in vROps. The vRO workflows can be executed manually or automatically. With this solution it is easy to implement sophisticated self-healing workflows for your vROps managed environment. In this blog post I …

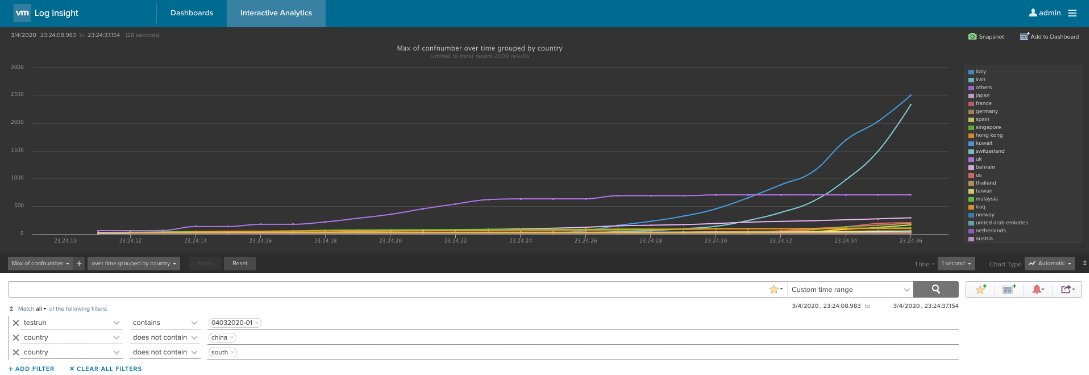

vRealize Operations and Logging via CFAPI and Syslog

Without any doubt, configuring vRealize Operations to send log messages to a vRealize Log Insight instance is the best way to collect, parse and display structured and unstructured log information. In this post I will explain the major differences between CFAPI and Syslog as the protocol used to forward log messages to a log server …

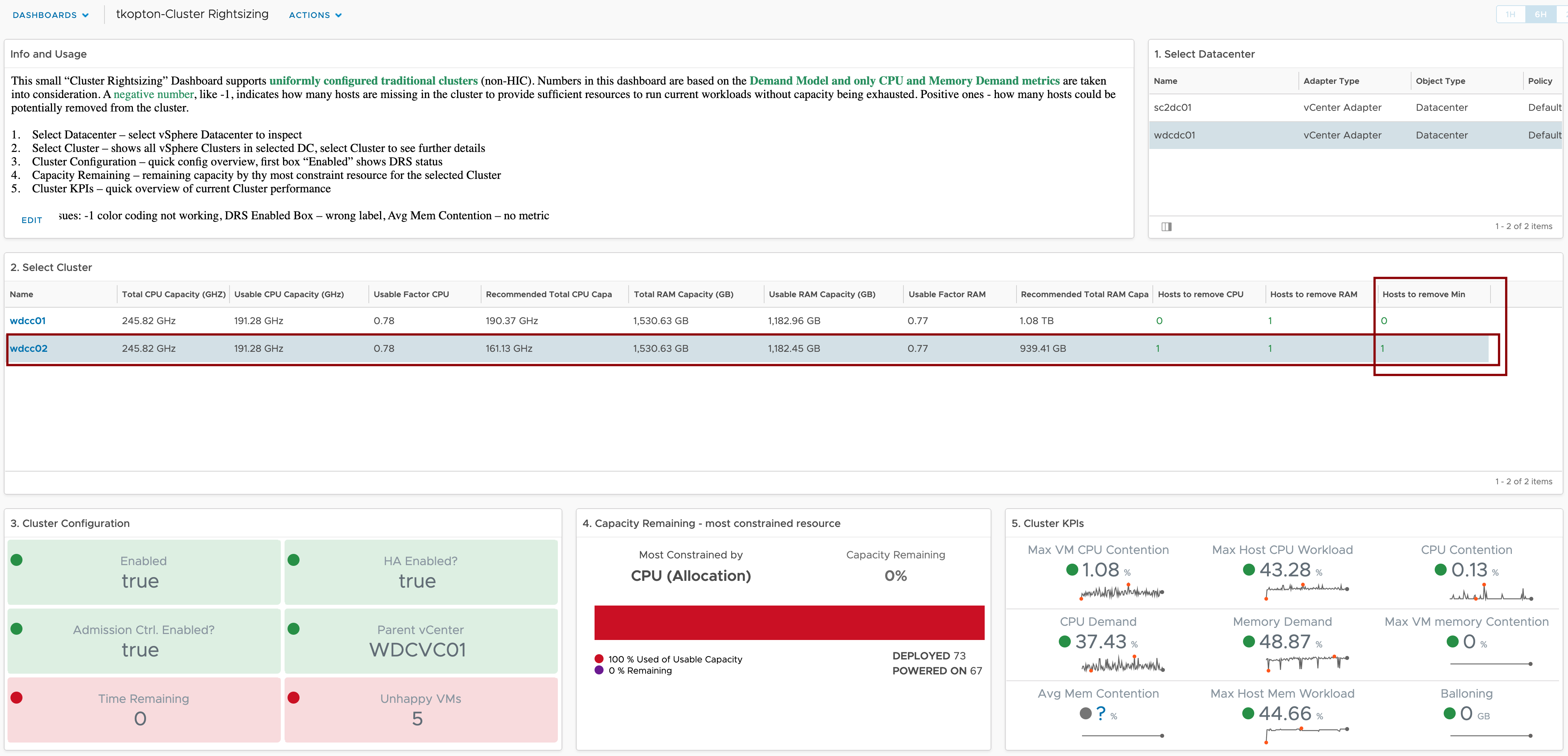

ESXi Cluster (non-HCI) Rightsizing using vRealize Operations

vRealize Operations, with its four main pillars (Optimize Performance, Optimize Capacity, Troubleshoot, and Manage Configuration), provides a perfect solution to manage complex SDDC environments. The “Optimize Performance” part of vRealize Operations provides a wide range of features like workload optimization to ensure consistent performance in your datacenters or VM rightsizing to reduce bottlenecks and ensure best …

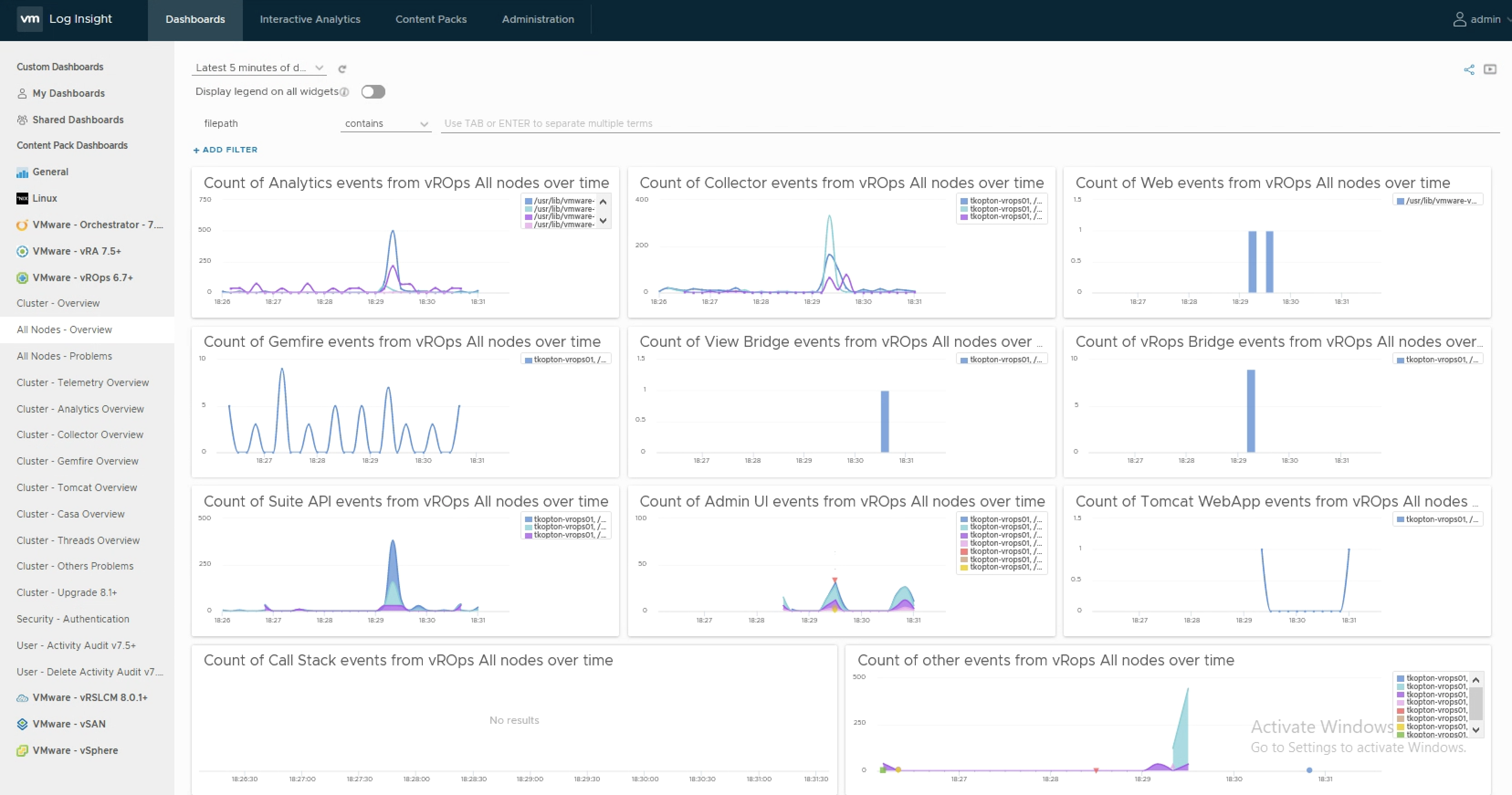

Parsing CSV Files using vRealize Log Insight

Last year I published a blog post focusing on the JSON parser available in vRealize Log Insight (https://thomas-kopton.de/vblog/?p=144), and I believe this could have been the beginning of a series, “vRLI Parsers”. In this post I will show how to configure and use the CSV parser using a very prominent and media-effective use …

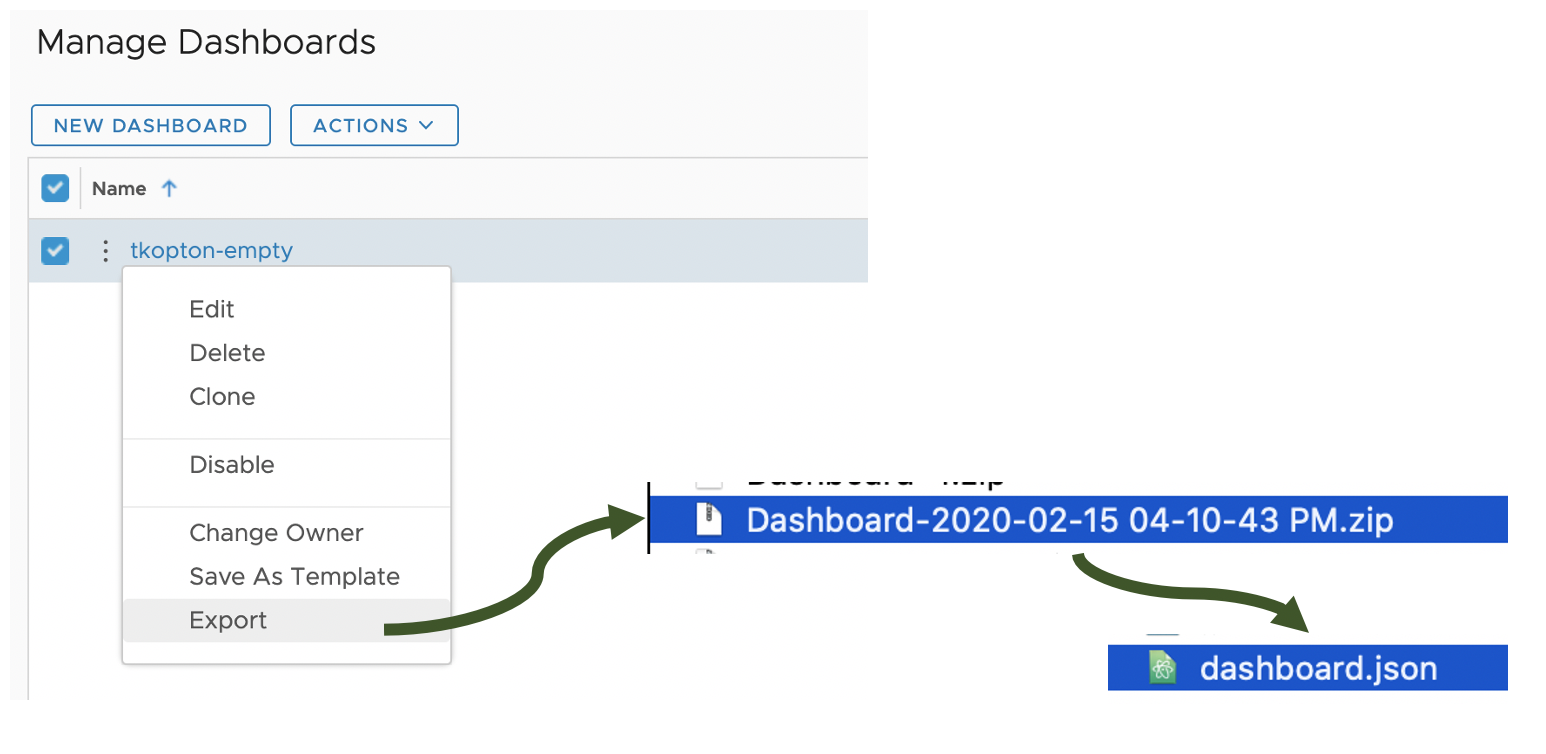

How to Merge vROps Dashboards – Anatomy of the Content

Recently I was asked whether it is possible to merge two, or generally speaking n, different vRealize Operations Dashboards into one Dashboard. To answer that question and provide a procedure for doing it, we need to inspect the internal structure of a vROps Dashboard. Anatomy of a vRealize Operations Dashboard: As everyone knows, vROps …

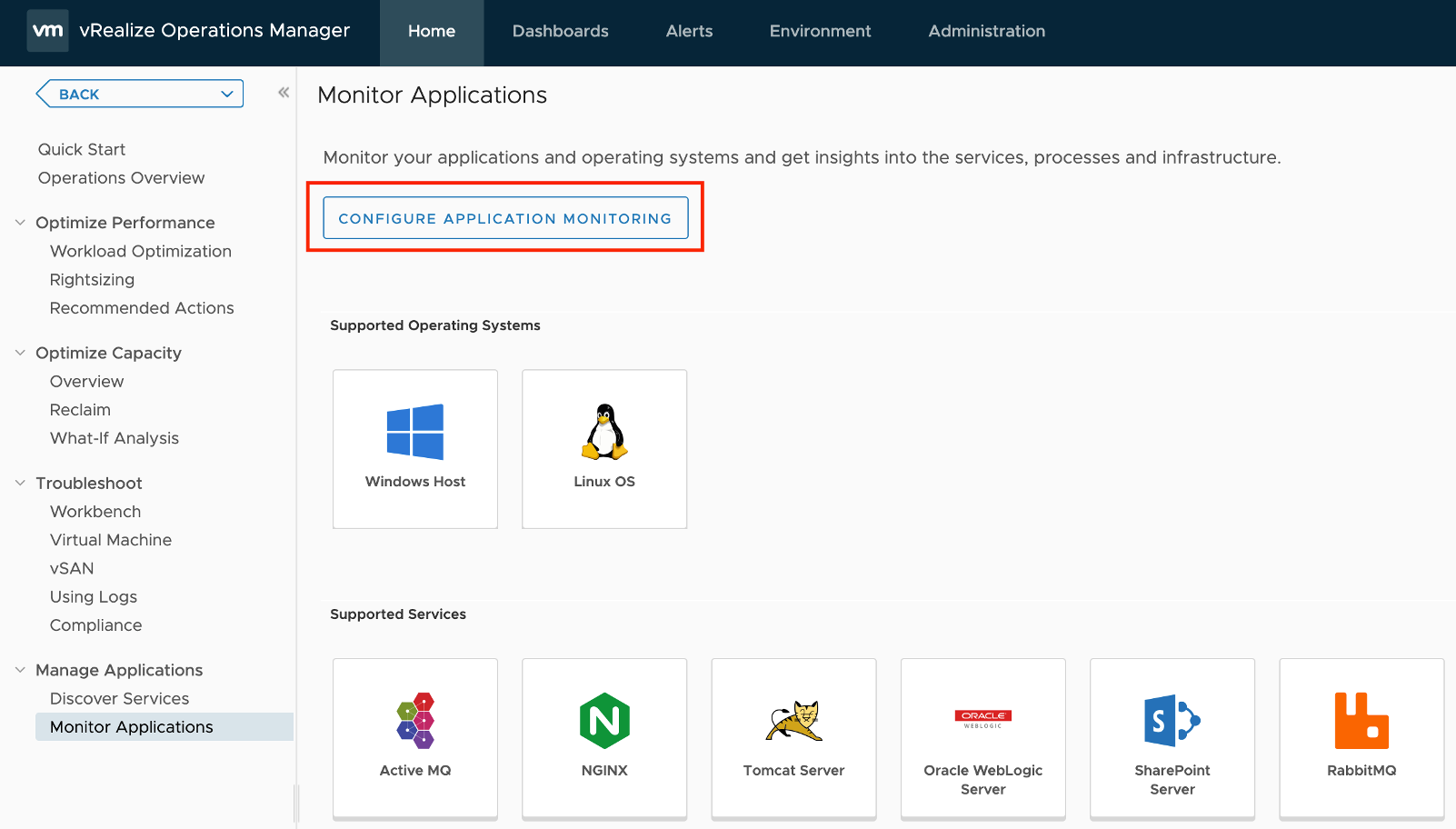

How to Configure Applications Monitoring in vROps 8.0

Applications Monitoring is an exciting functionality in vRealize Operations. To start monitoring one of the twenty services currently supported by vROps, the vRealize Operations Application Remote Collector (ARC) needs to be deployed and configured in vROps. The Application Remote Collector is provided as an OVA and the deployment process runs similarly to any other VMware OVA …