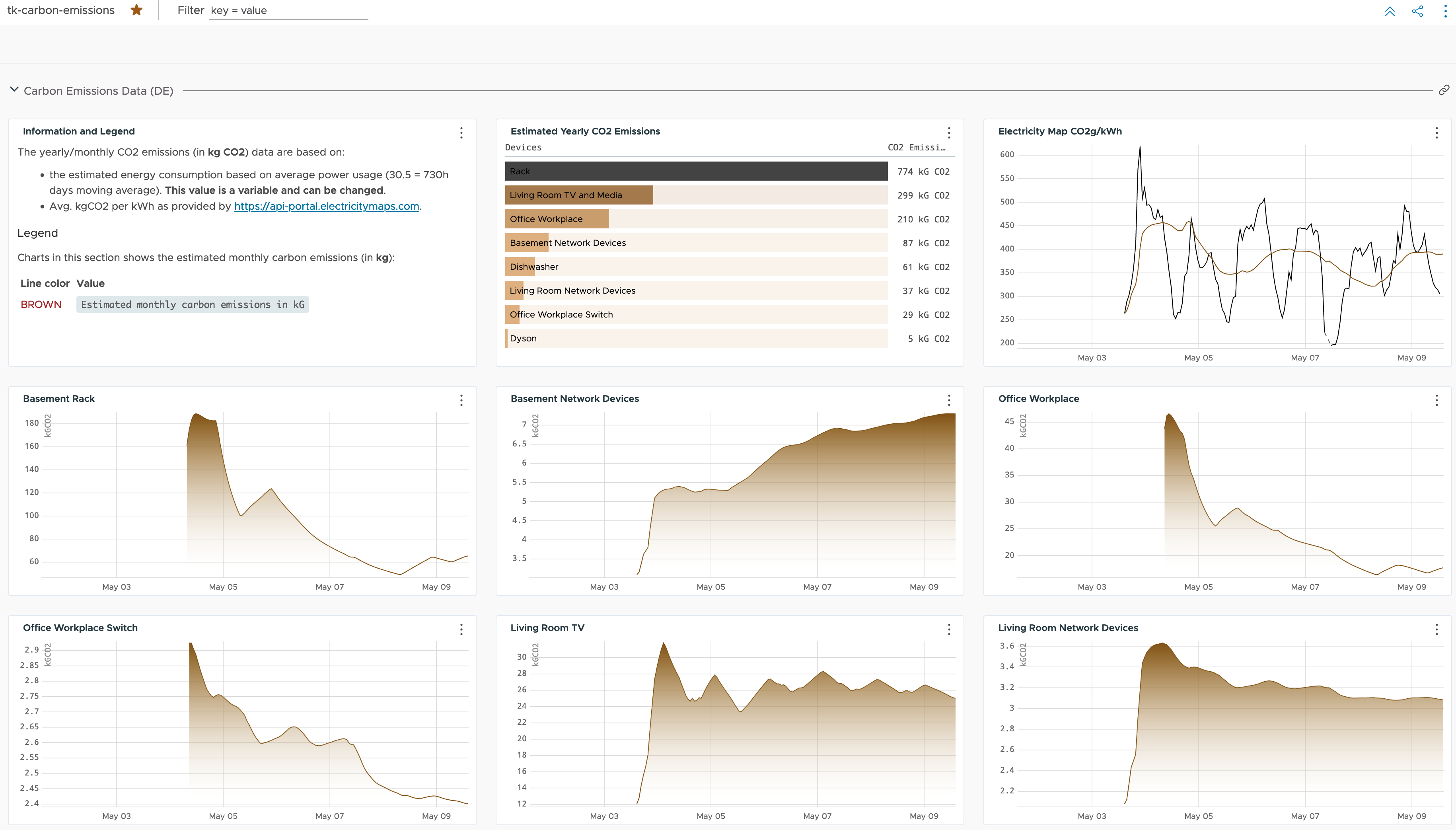

My last posts focused on sustainability and how VMware Aria Operations can help get more insights into energy consumption and infrastructure efficiency, and how to improve operations and make the virtual infrastructure more sustainable. In this post I will describe how I used an old Raspberry Pi, a DHT22 sensor, a few Shelly Plug S smart plugs …

VMware Aria Operations Near Real-Time Monitoring Option and Power Metrics

One of the advantages of VMware Aria Operations Cloud over the on-premises option is the availability of Near Real-Time Monitoring for the most important vSphere metrics. This is especially helpful when troubleshooting short-lived issues. However, this option brings with it a small challenge, especially if some of these near real-time metrics are later used …

Enhancing Sustainability Data in VMware Aria Operations using REST API and Automation

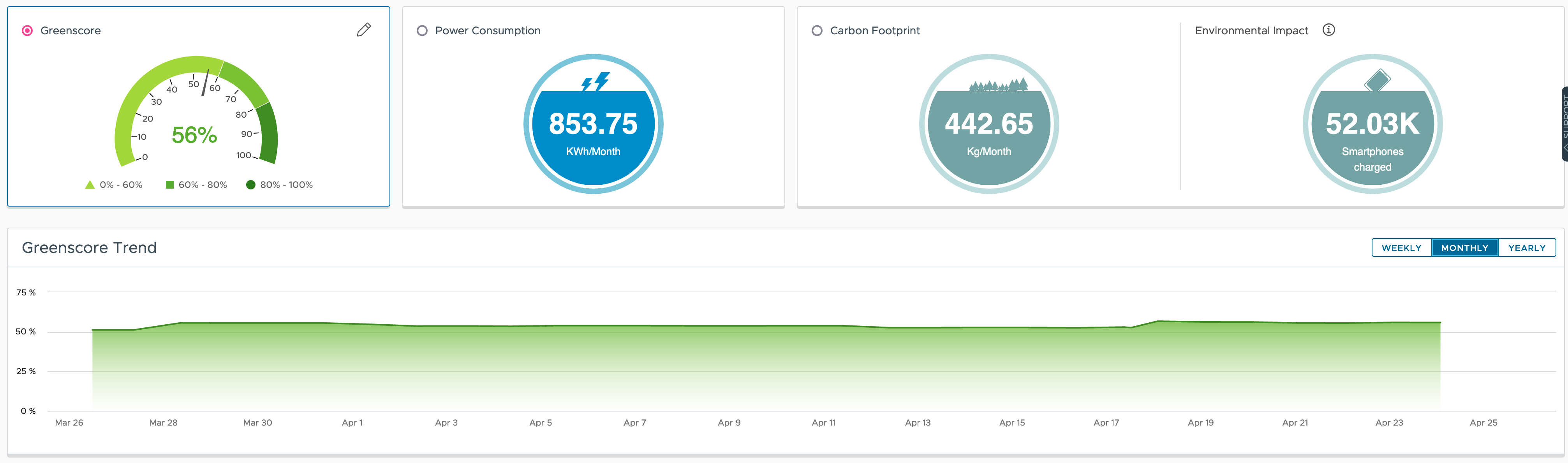

Overview of OOTB Sustainability: With its VMware Greenscore, VMware Aria Operations not only provides a great way of showing the effects of your current efforts toward more sustainable operations, it also offers multiple approaches that will help organizations improve their operational efficiency, save money and reduce carbon emissions. Major use cases possible OOTB: With the …

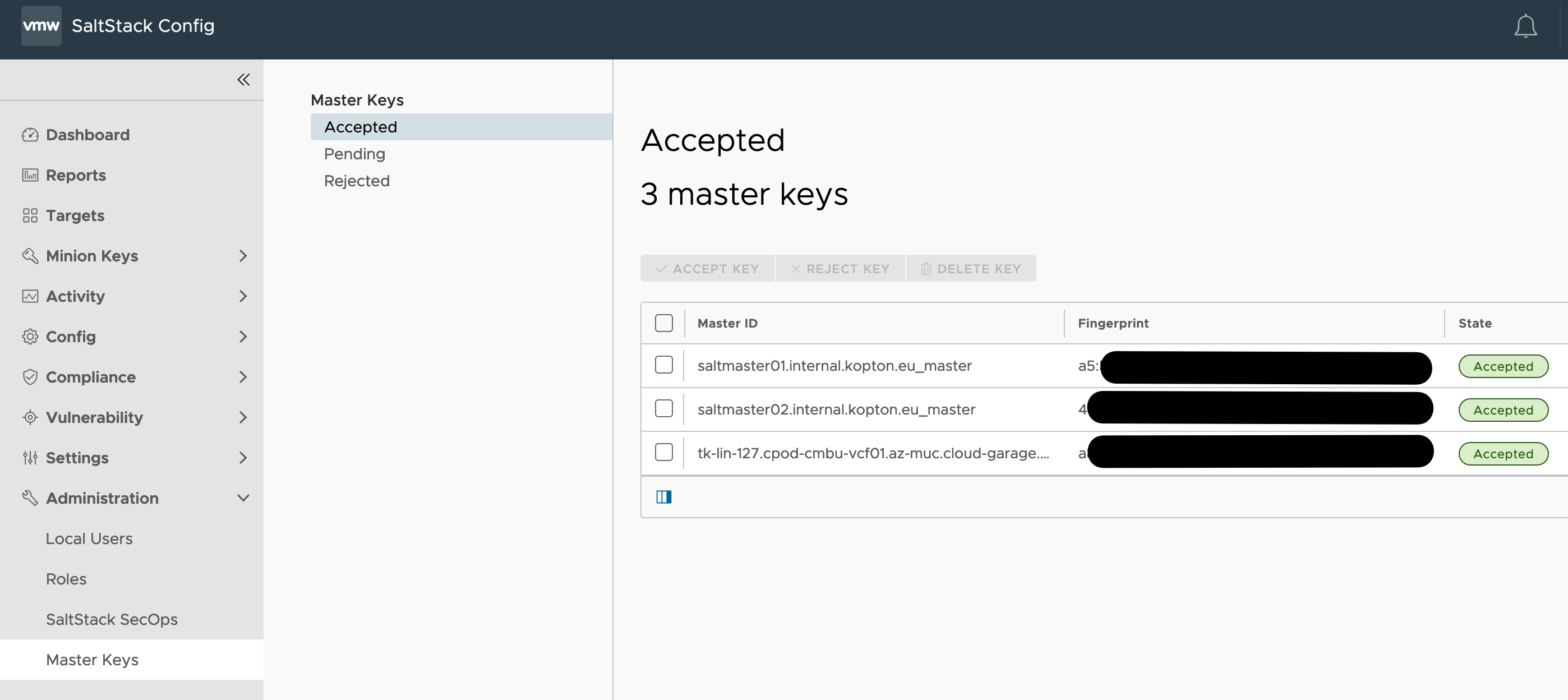

How to provision local SaltStack Master to work with VMware Aria Automation Config Cloud (aka SaltStack Config)

Recently I had to install and configure a SaltStack Master in my home lab and connect this master to my Aria Automation Config Cloud (aka SaltStack Config; I will use both names in this post) instance. Even though the official documentation has improved a lot, there are still some pitfalls, especially if you are not experienced …

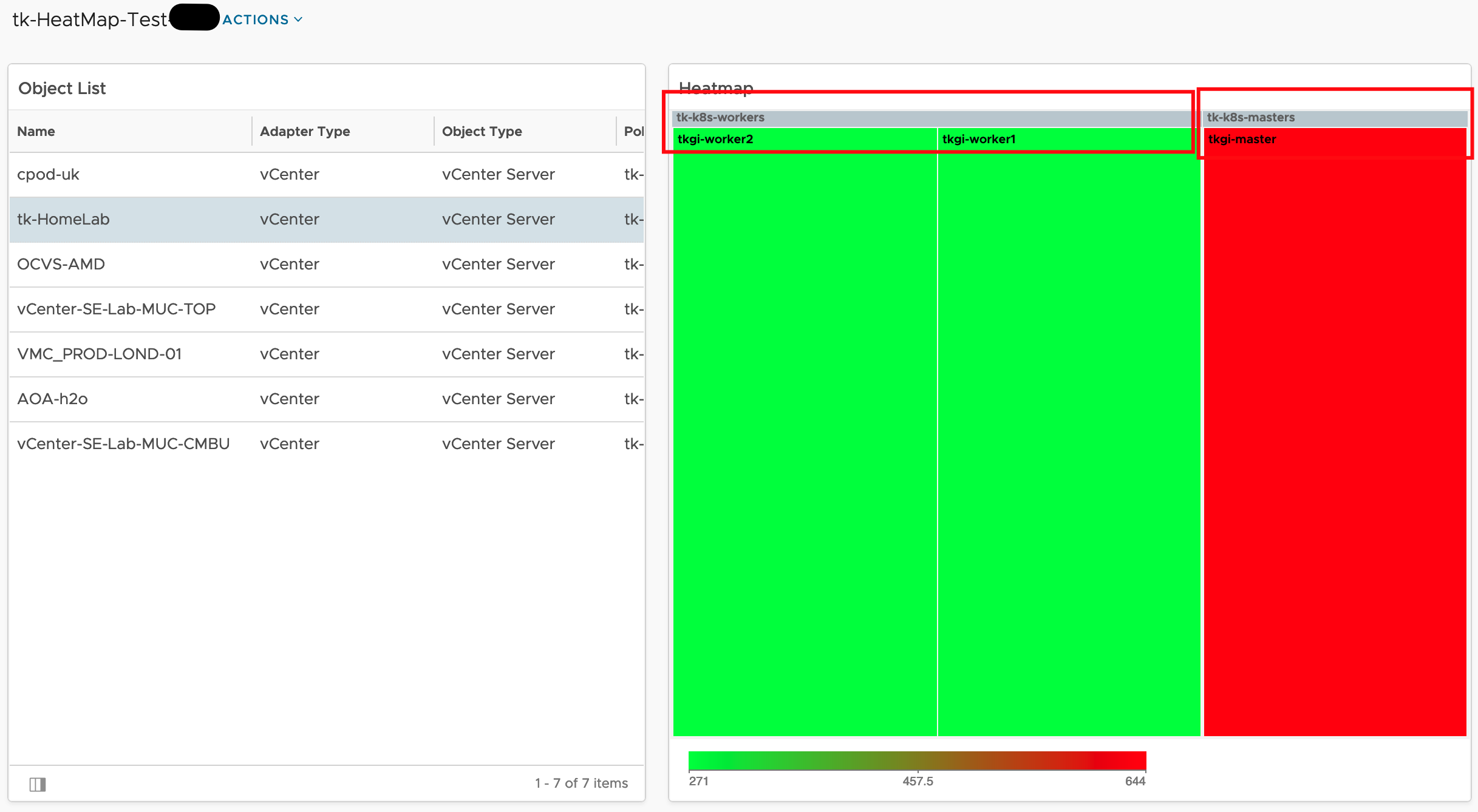

Aria Operations Heatmap Widget meets Custom Groups – Grouping by Property

The Aria Operations Heatmap Widget, which is used in many dashboards, makes it possible to group objects within the heatmap by other related object types, as in the following screenshot showing Virtual Machines grouped by their respective vSphere Cluster. Problem description: This is a great feature, but the grouping works only using object …

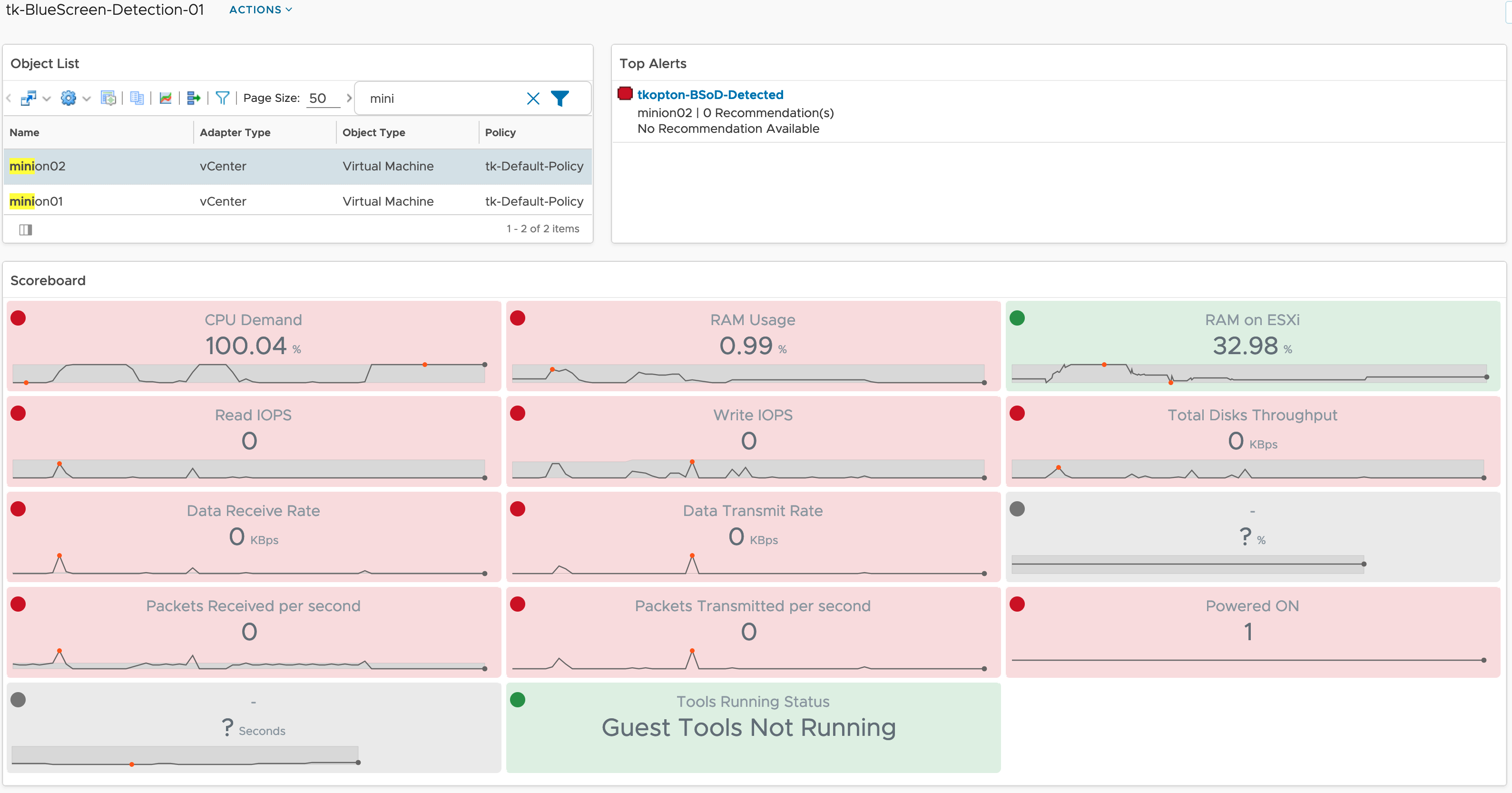

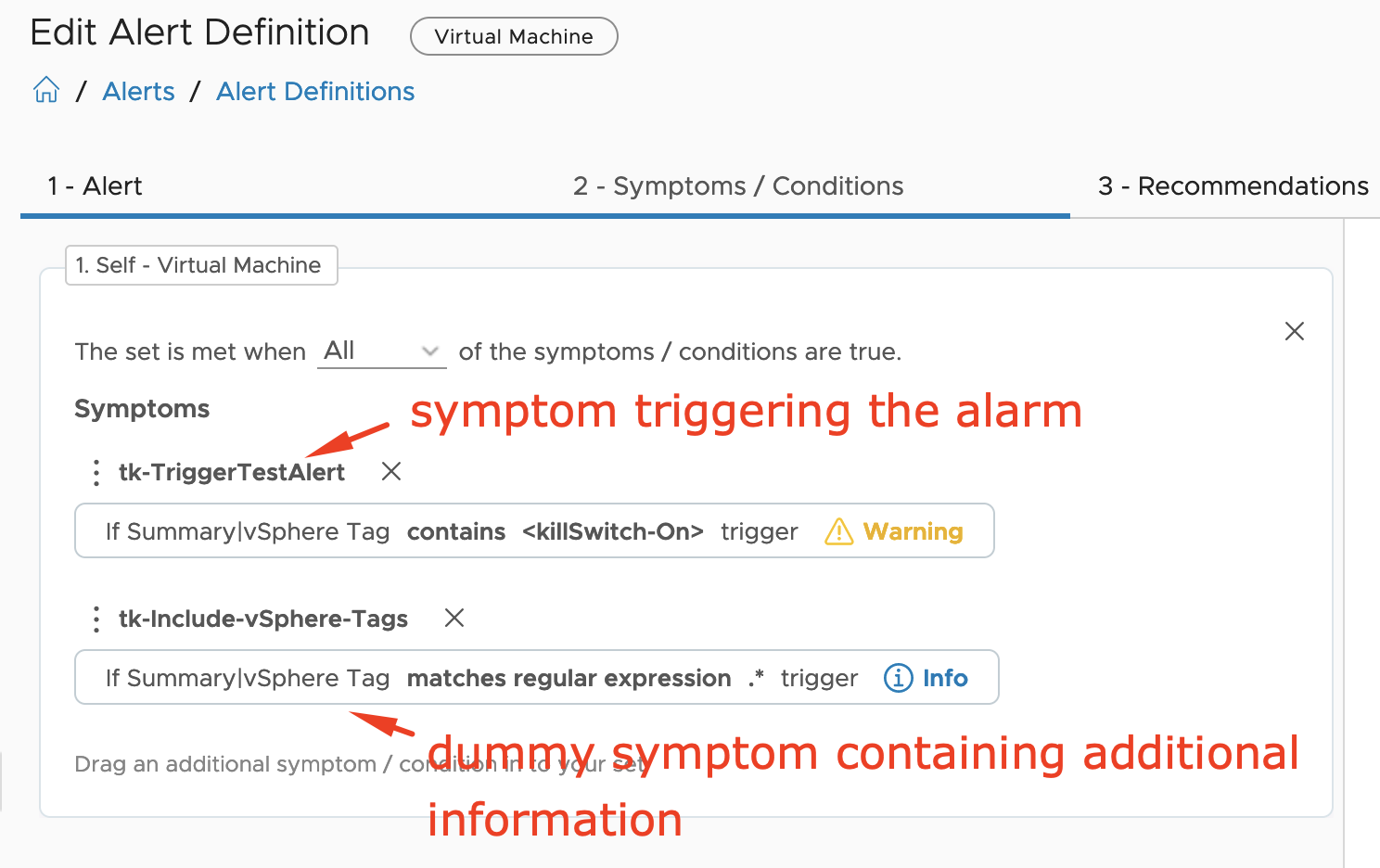

How to detect Windows Blue Screen of Death using VMware Aria Operations

Problem statement: Recently a customer asked me what would be the best way to get alerted by VMware Aria Operations when a Windows VM stopped because of a Blue Screen of Death (BSoD) or a Linux machine suddenly stopped working due to a kernel panic. Even if it looks like a piece of …

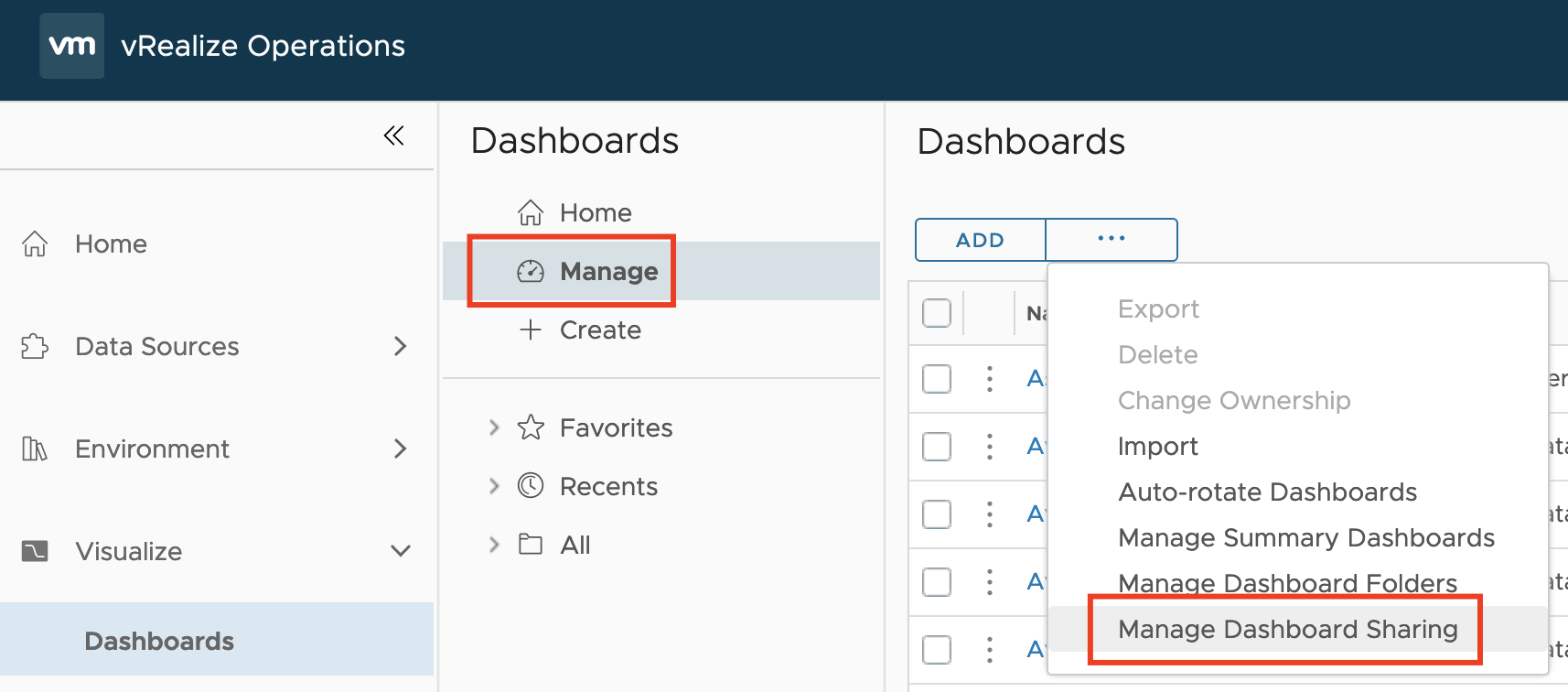

VMware Explore Follow-up 2 – Aria Operations Dashboard Permissions Management

Another question I was asked during my “Meet the Expert – Creating Custom Dashboards” session, which I could not answer due to the limited time, was: “How to manage access permissions to Aria Operations Dashboards in a way that allows only a specific group of content admins to edit only a specific group of dashboards?” Even …

VMware Explore Follow-up – Aria Operations and SNMP Traps

During one of my “Meet the Expert” sessions this year in Barcelona, I was asked if there is an easy way to use SNMP traps as Aria Operations notifications and let the SNMP trap receiver decide what to do with a trap based on included information other than the alert definition, object type or object …

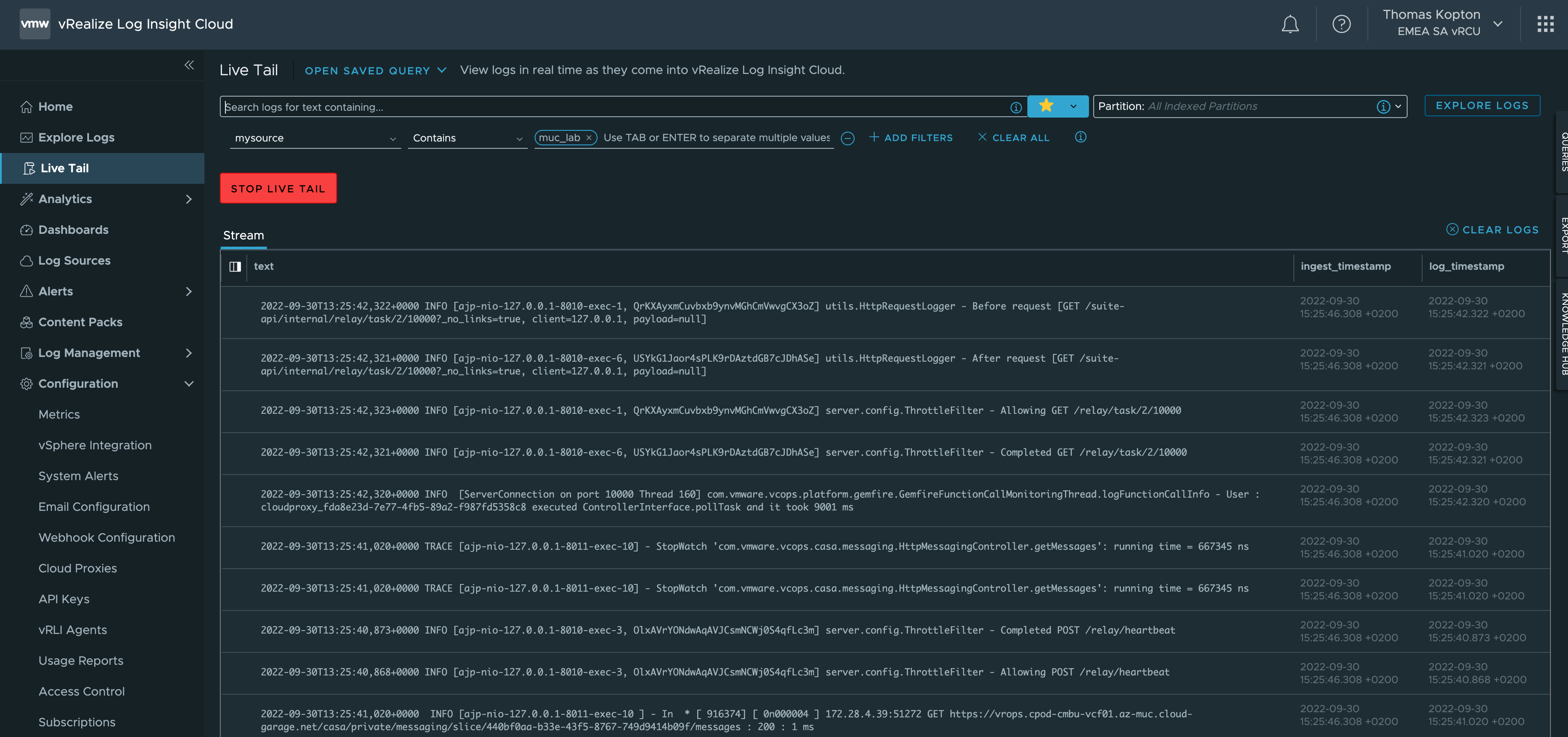

vRealize Log Insight and Direct Forwarding to Log Insight Cloud

Since the release of vRealize Log Insight 8.8, you can configure log forwarding from vRealize Log Insight to vRealize Log Insight Cloud without the need to deploy an additional Cloud Proxy. The Cloud Forwarding feature in Log Management makes it very easy to forward all or only selected log messages from your …

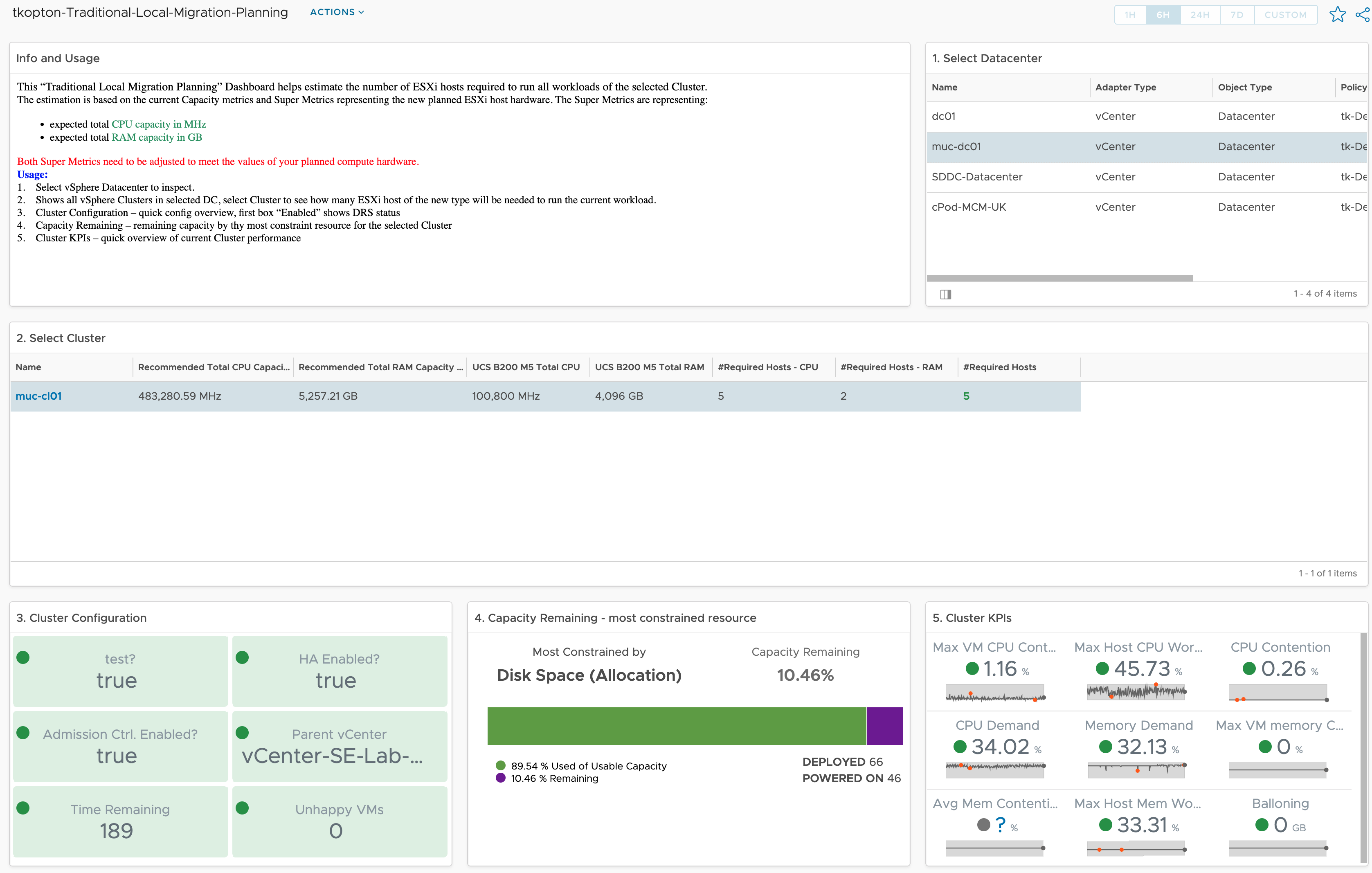

How vRealize Operations helps size new vSphere Clusters

In “ESXi Cluster (non-HCI) Rightsizing using vRealize Operations” I described how to use vRealize Operations and the numbers calculated by the Capacity Engine to estimate the number of ESXi hosts that might be moved to other clusters or decommissioned. The corresponding dashboard is available via VMware Code. In this post, I describe the opposite …